#12 - Towards World Supercomputer

A New Paradigm for Hyperscaling Decentralized Execution

Stanford Blockchain Review

Volume 2, Article No. 2

📚 Authors: Xiaohang Yu, Kartin, msfew – Hyper Oracle; Qi Zhou – EthStorage

🌟 Technical Prerequisite: Advanced

Introduction

From Bitcoin’s peer-to-peer consensus algorithm to Ethereum’s EVM to the idea of a Network State, one of the longstanding goals of the blockchain community has been to build a world supercomputer, or more specifically, a unified state machine that is decentralized, unstoppable, trustless, and scalable. While this has long been known to be theoretically possible, most ongoing efforts remain piecemeal and come with severe tradeoffs and limitations. In this paper, we explore the tradeoffs and limitations that existing attempts to build a world computer have faced, analyze the necessary components of such a machine, and finally propose a novel architecture for a world supercomputer.

1. Current Approach Limitations

a) Ethereum and L2 Rollups

Ethereum is the first real - and arguably the most successful - attempt to build a world supercomputer. Yet throughout its development, Ethereum has greatly prioritized decentralization and security over scalability and performance. Thus, while reliable, vanilla Ethereum is a far cry from a world supercomputer - it is simply not scalable.

The current go-to solution for all this is L2 rollups, which have emerged as the most widely adopted scaling solution for enhancing the performance of Ethereum's world computer. As an additional layer built on top of Ethereum, L2 rollups offer significant benefits and have been embraced by the community.

While there exist several definitions of L2 rollups, the general consensus is that an L2 rollup is a network that has the two key traits of on-chain data availability, and off-chain transaction execution for Ethereum or some other base network. Essentially, there is public accessibility of historical state or input transactions data, and verification of commitment on Ethereum, but all the individual transactions and state transitions are taken off the mainnet.
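The two traits above can be made concrete with a toy model. The following is a minimal sketch, not any production rollup: the function names (`state_root`, `execute_off_chain`, `build_batch`, `recheck_batch`) are hypothetical, and a single SHA-256 hash over the serialized balances stands in for a real state commitment. It only shows that execution happens off-chain while the input data and a commitment are published, so that anyone holding the published data can recompute the claimed result.

```python
import hashlib
import json

def state_root(balances: dict[str, int]) -> str:
    """Commit to the whole state with one hash (stand-in for a real state root)."""
    encoded = json.dumps(balances, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()

def execute_off_chain(balances: dict[str, int], txs: list) -> dict[str, int]:
    """Apply (sender, receiver, amount) transfers off-chain; invalid ones are skipped."""
    new_state = dict(balances)
    for sender, receiver, amount in txs:
        if new_state.get(sender, 0) >= amount:
            new_state[sender] -= amount
            new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state

def build_batch(balances: dict[str, int], txs: list):
    """What the toy rollup publishes: input data plus pre/post commitments."""
    post_state = execute_off_chain(balances, txs)
    batch = {
        "txs": txs,                          # on-chain data availability
        "pre_root": state_root(balances),
        "post_root": state_root(post_state),  # commitment checked on the base layer
    }
    return batch, post_state

def recheck_batch(balances: dict[str, int], batch: dict) -> bool:
    """Anyone with the published data can recompute the claimed post-state root."""
    if state_root(balances) != batch["pre_root"]:
        return False
    recomputed = execute_off_chain(balances, batch["txs"])
    return state_root(recomputed) == batch["post_root"]
```

In this sketch the base layer never executes the transfers itself; it only needs the published `txs` and the two roots to let others check the batch.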

While L2 rollups do, in fact, greatly enhance the performance of these “global computers,” many of them pose systemic risks of centralization [1] that fundamentally undermine the principles of blockchains as decentralized networks. This is because off-chain execution involves not only individual state transitions, but also the ordering and batching of transactions. In most cases [2], L2 sequencers do the ordering, while L2 validators compute the new state. Giving L2 sequencers this ordering power, however, creates a centralization risk: a centralized sequencer can abuse its power to arbitrarily censor transactions, compromise network liveness, and profit from MEV capture.
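To see why control over transaction order is valuable, consider a toy constant-product pool. This is an illustrative sketch only: the pool sizes, the trade amounts, and the names `swap` and `run` are assumptions made for the example, not a model of any specific rollup or exchange. It shows that the same two trades yield different payouts depending on who goes first, which is the lever a single sequencer holds.

```python
def swap(pool: tuple[float, float], dx: float):
    """Constant-product swap: sell dx of token X, receive dy of token Y."""
    x, y = pool
    dy = y - (x * y) / (x + dx)
    return (x + dx, y - dy), dy

def run(order: list[str], amounts: dict[str, float],
        pool: tuple[float, float] = (1000.0, 1000.0)) -> dict[str, float]:
    """Execute trades in the given order and report what each trader received."""
    received = {}
    for trader in order:
        pool, received[trader] = swap(pool, amounts[trader])
    return received

amounts = {"user": 100.0, "sequencer": 100.0}
user_first = run(["user", "sequencer"], amounts)
sequencer_first = run(["sequencer", "user"], amounts)

# The user receives less when the sequencer places its own trade first.
print(user_first["user"], sequencer_first["user"])
```

Whoever decides the order decides who gets the better price, and a party that can also drop transactions entirely can censor them.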

Although there have been many discussions on reducing this L2 centralization risk, such as through shared, outsourced, or based sequencer solutions [3], tradeoff-based solutions, or decentralized sequencer solutions (such as PoA, PoS leader selection, MEV auctions, and PoE [4]), many of these attempts are still in the conceptual design stage and are far from a panacea [5]. Moreover, many L2 projects appear reluctant to implement decentralized sequencer solutions. Arbitrum, for example, has suggested that a decentralized sequencer may become an optional feature [6]. Beyond the centralized sequencer issue, L2 rollups may also suffer centralization problems from high full-node hardware requirements, governance risks, and the app-rollup trend, which we won’t discuss in detail.

b) L2 Rollups and the World Computer Trilemma

All these centralization issues from relying on L2s to scale Ethereum expose a fundamental problem, the “world computer trilemma” as derived from the classic blockchain “trilemma”:

Different priorities in this trilemma will result in different trade-offs:

Strong Consensus Ledger: Inherently requires repetitive storage and computation, and therefore is not suitable for scaling storage and computation.

Strong Computation Power: Needs to reuse consensus while performing a lot of computation and proof tasks, and is therefore not suitable for large-scale storage.

Strong Storage Capacity: Needs to reuse consensus while performing frequent random sampling proofs of space, so it is not suitable for computation.

The traditional L2 scheme does, in fact, build the world computer in a modular way. However, because the different functions are not partitioned based on the aforementioned priorities, even with the extension, the world computer remains the original mainframe architecture [7] of Ethereum. This architecture cannot satisfy other features such as decentralization and performance, and cannot solve the trilemma of the world computer.

In other words, L2 rollups effectively achieve the following:

Modularization [8] of the World Computer (more experimentation on the consensus layer with some external trust on centralized sequencers)

Throughput Enhancement of the World Computer (though it is not strictly "scaling" [9])

Open Innovation of the World Computer

However, L2 rollups don’t provide:

Decentralization of World Computer

Performance Enhancement of the World Computer (the maximum TPS of rollups added up is actually not enough [10], and an L2 cannot have faster finality than L1 [11])

Computation of World Computer (which involves computations beyond transaction processing like machine learning and oracles [12])

While the world computer architecture can have L2s and modular blockchains, it does not address the fundamental issue. An L2 can solve the blockchain trilemma, but not the trilemma of the world computer itself. Thus, as we can see, the current approach is insufficient to truly implement the decentralized world supercomputer that Ethereum initially envisioned. We need performance scaling with decentralization, as opposed to incremental decentralization [13] with performance scaling.

2. Design Goals of a World Supercomputer

To this end, we need a network that supports truly general-purpose, intensive computing (especially machine learning and oracles) while preserving the full decentralization of the base layer blockchain. Such high-intensity computations must be able to run directly on the network and eventually be verified on the blockchain. Additionally, we need to provide ample storage and computing power on top of the existing World Computer implementation, with the following goals and design methods:

a) Computation Demand

To meet the demand and purpose of a world computer, we expand on the concept of a world computer as described by Ethereum and aim for a World Supercomputer.

A World Supercomputer first and foremost needs to do everything that computing can do today, and to do it in a decentralized manner. In preparation for large-scale adoption, developers require the World Supercomputer to, for instance, accelerate the development and adoption of decentralized machine learning by running model inference and verification.

Large models, like MorphAI [14], will be able to use Ethereum to distribute inference tasks and verify the output from any third party node.

In the case of a computationally resource-intensive task like machine learning, achieving such an ambition requires not only trust-minimized computation techniques like zero-knowledge proofs but also larger data capacity on decentralized networks. These cannot be accomplished on a single P2P network, like a classical blockchain.

b) Solution for Performance Bottleneck

In the early development of computers, pioneers faced similar performance bottlenecks as they made trade-offs between computational power and storage capacity. Consider the smallest component of a circuit as an illustration.

We can compare the amount of computation to lightbulbs/transistors and the amount of storage to capacitors. In an electrical circuit, a lightbulb requires current to emit light, similar to how computational tasks require computational volume to be executed. Capacitors, on the other hand, store electrical charge, similar to how storage can store data.

There may be a trade-off in the distribution of energy between the lightbulb and the capacitor for the same voltage and current. Typically, higher computational volumes require more current to perform computational tasks, and therefore require less energy storage from the capacitor. A larger capacitor can store more energy, but may result in lower computational performance at higher computational volumes. This trade-off leads to a situation where computation and storage cannot be combined in some cases.

This trade-off guided the von Neumann architecture's concept of separating the storage device from the central processor. Similar to decoupling the lightbulbs from the capacitors, this separation can solve the performance bottleneck of our World Supercomputer.

In addition, traditional high-performance distributed databases use a design that separates storage and computation. We adopt the same scheme because it is fully compatible with the characteristics of a World Supercomputer.

c) Novel Architectural Topology

The main difference between modular blockchains (including L2 rollups) and the world computer architecture lies in their purpose:

Modular Blockchain [15]: Designed for creating a new blockchain by selecting modules (consensus, DA, settlement, and execution) to combine together into a modular blockchain.

World Supercomputer: Designed to establish a global decentralized computer/network by combining networks (base layer blockchain, storage network, computation network) into a world computer.

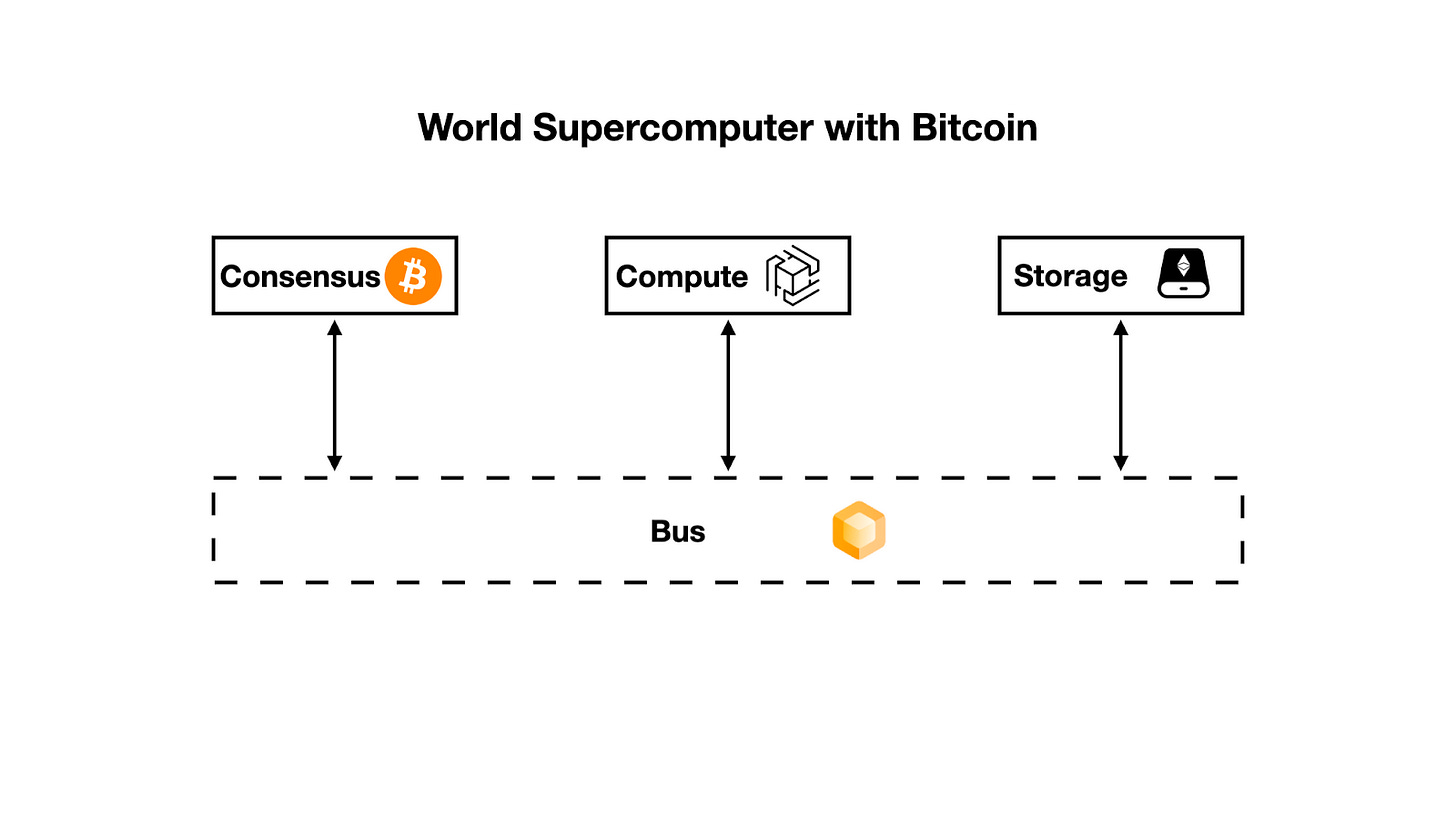

We propose another option alongside modular blockchains and L2s. The final World Supercomputer will be made up of three topologically heterogeneous P2P networks: a consensus ledger, a computation network, and a storage network, connected by a trustless bus (connector) such as zero-knowledge proof technology. This basic setup allows the World Supercomputer to solve the world computer trilemma, and additional components can be added as needed for specific applications.

It's worth noting that topological heterogeneity goes beyond just differences in architecture and structure. It also encompasses fundamental differences in topological form. For example, while Ethereum and Cosmos are heterogeneous in terms of their layers of network and internet of networks, they are still equivalent in terms of topological heterogeneity (blockchains).

Within the World Supercomputer, a consensus ledger blockchain takes the form of a chain of blocks with nodes forming a complete graph, while a network like Hyper Oracle's zkOracle network is a ledgerless network with nodes forming a cyclic graph, and the network structure of a storage rollup is yet another variation, with partitions forming sub-networks.

We can have a fully decentralized, unstoppable, permissionless, and scalable World Supercomputer by linking three topologically heterogeneous peer-to-peer networks for consensus, computation, and storage via zero-knowledge proof as a data bus.

3. World Supercomputer Architecture

Similar to building a physical computer, we must assemble the consensus network, computation network, and storage network mentioned previously into a World Supercomputer.

Selecting and connecting each component appropriately will help us achieve a balance between the Consensus Ledger, Computing Power, and Storage Capacity trilemma, ultimately ensuring the decentralized, high-performance, and secure nature of the World Supercomputer.

The architecture of the World Supercomputer, described by its functions, is as follows:

The nodes of a World Supercomputer network with consensus, computation, and storage networks would have a structure similar to the following:

To start the network, World Supercomputer's nodes will be based on Ethereum's decentralized foundation. Nodes with high computational performance can join zkOracle's computation network for proof generation for general computation or machine learning, while nodes with high storage capacity can join EthStorage's storage network.

The above example depicts nodes that run both Ethereum and the computation/storage networks. Nodes that only run the computation/storage networks can access the latest Ethereum block or prove the data availability of storage through zero-knowledge proof-based buses like zkPoS and zkNoSQL, all without the need for trust.

a) Ethereum for Consensus

Currently, World Supercomputer's Consensus Network exclusively uses Ethereum. Ethereum boasts a robust social consensus and network-level security that ensures decentralized consensus.

World Supercomputer is built on a Consensus Ledger-centered architecture. The consensus ledger has two main roles:

Provide consensus for the entire system

Define the CPU Clock Cycle with Block Interval
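The second role, using the block interval as a clock cycle, can be pictured as an event loop in which every new block is one tick that other components subscribe to. The sketch below is a hypothetical illustration: `BlockClock`, `on_tick`, and `new_block` are names invented for this example, and the 12-second constant is Ethereum's proof-of-stake slot time.

```python
from dataclasses import dataclass, field
from typing import Callable

SLOT_SECONDS = 12  # Ethereum proof-of-stake slot time

@dataclass
class BlockClock:
    """Hypothetical clock: each new block is one tick of the whole system."""
    height: int = 0
    listeners: list[Callable[[int], None]] = field(default_factory=list)

    def on_tick(self, listener: Callable[[int], None]) -> None:
        """Register a component (e.g. a computation node) to run once per block."""
        self.listeners.append(listener)

    def new_block(self) -> int:
        """Advance the clock by one block and notify every listener."""
        self.height += 1
        for listener in self.listeners:
            listener(self.height)
        return self.height

clock = BlockClock()
ticks: list[int] = []
clock.on_tick(ticks.append)   # a stand-in for an off-chain job
for _ in range(3):
    clock.new_block()
print(ticks, clock.height * SLOT_SECONDS, "seconds elapsed")
```

In this picture, off-chain work is scheduled relative to block heights rather than wall-clock time, so every component agrees on what "now" means.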

In comparison to a computation network or a storage network, Ethereum cannot handle huge amounts of computation simultaneously nor store large amounts of general-purpose data.

In the World Supercomputer, Ethereum is a consensus network that stores data-availability data (such as that of L2 rollups), reaches consensus for the computation and storage networks, and loads critical data so that the computation network can perform further off-chain computations.

b) Storage Rollup for Storage

Ethereum's Proto-danksharding and Danksharding are essentially ways to expand the consensus network. To achieve the required storage capacity for the World Supercomputer, we need a solution that is both native to Ethereum and able to store large amounts of data that persist forever.

Storage Rollups, such as EthStorage, essentially scale Ethereum for large-scale storage. Furthermore, as computationally resource-intensive applications like machine learning require a large amount of memory to run on a physical computer, it's important to note that Ethereum's “memory” cannot be aggressively scaled. Storage Rollup is necessary for the "swapping" that allows the World Supercomputer to run compute-intensive tasks.
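The "swapping" analogy can be sketched as follows: the scarce on-chain "memory" holds only a short commitment, while the large blob lives in the storage network and is checked against that commitment when it is loaded. This is a simplified illustration under our own assumptions, not EthStorage's actual design: `StorageNetwork` and `swap_in` are hypothetical names, and a plain SHA-256 hash stands in for whatever commitment scheme a real storage rollup uses.

```python
import hashlib

class StorageNetwork:
    """Hypothetical off-chain blob store, keyed by the hash of the content."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, blob: bytes) -> str:
        """Store a blob and return the short commitment to record on-chain."""
        commitment = hashlib.sha256(blob).hexdigest()
        self._blobs[commitment] = blob
        return commitment

    def get(self, commitment: str) -> bytes:
        return self._blobs[commitment]

def swap_in(commitment: str, storage: StorageNetwork) -> bytes:
    """Load a blob for computation, rejecting it if it does not match the commitment."""
    blob = storage.get(commitment)
    if hashlib.sha256(blob).hexdigest() != commitment:
        raise ValueError("blob does not match the on-chain commitment")
    return blob

storage = StorageNetwork()
model_weights = b"\x00" * 1_000_000          # a large payload, e.g. ML parameters
on_chain_record = storage.put(model_weights)  # only 64 hex characters go "on-chain"
assert swap_in(on_chain_record, storage) == model_weights
```

The on-chain footprint stays constant regardless of blob size, which is the property that lets compute-intensive tasks work with data far larger than the consensus layer could hold.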

Additionally, EthStorage provides a web3:// access protocol (ERC-4804 [16]), which acts like the native URI scheme of a World Supercomputer, that is, its way of addressing resources in storage.
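As a rough illustration of URI-style addressing, the sketch below splits a web3:// URL into a target, an optional number after the colon, and path segments using Python's standard `urllib.parse`. It deliberately does not implement the resolution rules of ERC-4804 [16]; the function name `split_web3_url`, the example URL, and the reading of the number as a chain ID are assumptions made for this example.

```python
from urllib.parse import urlsplit

def split_web3_url(url: str) -> dict:
    """Split a web3:// URL into its parts (no on-chain resolution is performed)."""
    parts = urlsplit(url)
    if parts.scheme != "web3":
        raise ValueError("not a web3:// URL")
    return {
        "target": parts.hostname,    # contract name or address (lowercased by urlsplit)
        "chain_id": parts.port,      # assumed meaning of the optional ':<number>'
        "path": [segment for segment in parts.path.split("/") if segment],
    }

print(split_web3_url("web3://example.eth:1/hello/world"))
```

A client would then map these parts onto a contract call and return the result as the resource, in the same way a browser maps an http:// URL onto a server request.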

c) zkOracle [17] Network for Computation

The computation network is the most important element of a World Supercomputer, as it determines the overall performance. It must be able to handle complex calculations such as oracle workloads or machine learning, and it should be faster than both the consensus network and the storage network in accessing and processing data.

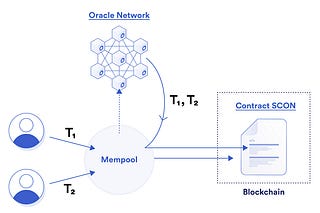

zkOracle Network is a decentralized and trust-minimized computation network that is capable of handling arbitrary computations. Any running program generates a ZK proof, which can be easily verified by consensus (Ethereum) or other components when in use.

Hyper Oracle, a zkOracle Network, is a network of ZK nodes, powered by zkWASM and EZKL, which can run any computation with the proof of execution traces.

A zkOracle Network is a ledgerless blockchain (no global state) that follows the chain structure of the original blockchain (Ethereum), but operates as a computational network without a ledger. The zkOracle Network does not guarantee computational validity through re-execution like traditional blockchains; rather, it provides computational verifiability through the proofs it generates. The ledgerless design and dedicated node setup for computing allow zkOracle Networks, like Hyper Oracle, to focus on high-performance and trust-minimized computing. Instead of generating new consensus, the result of the computation is output directly to the consensus network.
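The difference between re-executing a computation and verifying a proof of it can be illustrated with a deliberately simple stand-in. The sketch below is not a zero-knowledge proof and has nothing to do with zkWASM internals: it uses integer factorization, where finding the answer is expensive but checking it is a single multiplication. The function names are hypothetical. The point is only the asymmetry: one node does the heavy work, and everyone else checks a short certificate instead of repeating the work.

```python
def prove_factorization(n: int) -> tuple[int, int]:
    """Heavy off-chain work: find a non-trivial factor pair by trial division."""
    d = 2
    while d * d <= n:
        if n % d == 0:
            return d, n // d
        d += 1
    raise ValueError("n is prime; no non-trivial factorization exists")

def verify_factorization(n: int, proof: tuple[int, int]) -> bool:
    """Cheap check: one multiplication plus range checks, no search required."""
    p, q = proof
    return 1 < p < n and 1 < q < n and p * q == n

n = 1009 * 1013
proof = prove_factorization(n)          # roughly a thousand trial divisions
assert verify_factorization(n, proof)   # a handful of arithmetic operations
```

A real ZK proof system generalizes this asymmetry to arbitrary programs and keeps the proof short, which is what allows a consensus network to accept results it never executed.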

In a computation network of zkOracle, each compute unit or executable is represented by a zkGraph. These zkGraphs define the computation and proof generation behavior of the computation network, just like how smart contracts define the computation of the consensus network.

I. General Off-chain Computation

The zkGraph programs in zkOracle's computation network can be used for two major cases without external stacks, as well as for general off-chain computation:

indexing (accessing blockchain data)

automation (automating smart contract calls)

any other off-chain computation

The first two scenarios can fulfill the middleware and infrastructure requirements of any smart contract developer. This implies that, as a developer on a World Supercomputer, you can carry out the entire end-to-end decentralized development process, which includes on-chain smart contracts on the consensus network as well as off-chain computation on the computation network, when creating a complete decentralized application.
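To make the indexing and automation cases more tangible, here is a hypothetical sketch of what such an off-chain program does conceptually. It is not the zkGraph API, which this article does not specify: the `Event` type, the contract and event names, and the `rebalance` call are all invented for illustration, and proof generation is omitted entirely.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Event:
    """Hypothetical decoded log entry from the consensus network."""
    block: int
    contract: str
    name: str
    value: int

def index_events(events: list[Event], contract: str, name: str) -> list[Event]:
    """Indexing: select only the on-chain events an application cares about."""
    return [e for e in events if e.contract == contract and e.name == name]

def automate(indexed: list[Event], threshold: int) -> Optional[dict]:
    """Automation: decide off-chain whether a smart contract call should be sent."""
    total = sum(e.value for e in indexed)
    if total >= threshold:
        return {"call": "rebalance", "args": [total]}  # hypothetical contract call
    return None

events = [
    Event(1, "0xpool", "Deposit", 40),
    Event(1, "0xother", "Deposit", 500),
    Event(2, "0xpool", "Deposit", 70),
]
deposits = index_events(events, "0xpool", "Deposit")
print(automate(deposits, threshold=100))
```

In a zkOracle setting, the output of such a program would be accompanied by a proof so that the consensus network can accept the triggered call without trusting the node that computed it.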

II. ML/AI Computation

In order to achieve Internet-level adoption and support any application scenario, World Supercomputer needs to support machine learning computing in a decentralized way.

Through zero-knowledge proof technology as well, machine learning and artificial intelligence can be integrated into the World Supercomputer and verified on Ethereum's consensus network, making them truly on-chain.

zkGraph can connect to external technology stacks in this scenario, thus combining zkML itself with World Supercomputer's computation network. This enables all types of zkML applications [18]:

User-privacy-preserving ML/AI

Model-privacy-preserving ML/AI

ML/AI with Computational Validity

To enable the machine learning and AI computational capabilities of World Supercomputer, zkGraph will be combined with the following cutting-edge zkML technology stack, providing them with direct integration with consensus networks and storage networks.

EZKL [19]: inference for deep learning models and other computational graphs in a zk-SNARK.

Remainder [20]: speedy machine learning operations in Halo2 Prover.

circomlib-ml [21]: circom circuits library for machine learning.

e) zk as Data Bus

Now that we have all the essential components of the World Supercomputer, we require a final component that connects them all. We need a verifiable and trust-minimized bus to enable communication and coordination between components.

For a World Supercomputer that uses Ethereum as its consensus network, Hyper Oracle zkPoS is a fitting candidate for zk Bus. zkPoS is a critical component of zkOracle, which verifies consensus of Ethereum via ZK, allowing Ethereum's consensus to spread and be verified in any environment.

As a decentralized and trust-minimized bus, zkPoS can connect all components of the World Supercomputer with very little verification overhead, thanks to ZK. As long as there is a bus like zkPoS, data can flow freely within the World Supercomputer.

When Ethereum's consensus is passed from the consensus layer to the bus as the World Supercomputer's initial consensus data, zkPoS can prove it with state/event/transaction proofs. The resulting data can then be passed to the computation network of the zkOracle Network.
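The idea behind a state, event, or transaction proof is that a recipient holding only a short commitment can check that a specific item belongs to the committed data. The sketch below shows this with a plain binary Merkle tree over SHA-256. This is a simplification chosen for readability: Ethereum itself uses different structures (such as Merkle Patricia tries), zkPoS additionally proves the consensus itself, and the function names here are hypothetical.

```python
import hashlib

def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_root_and_proof(leaves: list[bytes], index: int):
    """Build a binary Merkle tree; return (root, sibling path for leaves[index])."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:                 # duplicate the last node on odd levels
            level.append(level[-1])
        sibling = index ^ 1                # neighbour within the same pair
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof

def verify_inclusion(leaf: bytes, proof, root: bytes) -> bool:
    """Recompute the path from the leaf up to the root using only the siblings."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root

txs = [b"tx0", b"tx1", b"tx2"]
root, proof = merkle_root_and_proof(txs, 2)
assert verify_inclusion(b"tx2", proof, root)
assert not verify_inclusion(b"forged", proof, root)
```

The verifier touches only a logarithmic number of hashes, which is why such proofs can travel over the bus to environments that never see the full block.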

In addition, for the storage network’s bus, EthStorage is developing zkNoSQL to enable proofs of data availability, allowing other networks to quickly verify that BLOBs have sufficient replicas.

f) Another Case: Bitcoin as Consensus Network

As is the case with many Layer 2 sovereign rollups, decentralized networks like Bitcoin can also serve as the consensus network underlying a World Supercomputer.

To support such a World Supercomputer, we need to replace the zkPoS bus, as Bitcoin is a blockchain network based on the PoW mechanism.

We can use ZeroSync [22] to implement zk as Bus for a Bitcoin-based World Supercomputer. ZeroSync is similar to "zkPoW" in that it synchronizes Bitcoin's consensus with zero-knowledge proofs, allowing any computing environment to verify and obtain the latest Bitcoin state within milliseconds.

g) Workflow

Here's an overview of the transaction process in an Ethereum-based World Supercomputer, broken down into steps:

Consensus: Transactions are processed and agreed upon using Ethereum.

Computation: The zkOracle Network performs relevant off-chain calculations (defined by zkGraph loaded from EthStorage) by quickly verifying the proofs and consensus data passed by zkPoS acting as a bus.

Consensus: In certain cases, such as automation and machine learning, the computation network will pass data and transactions back to Ethereum or EthStorage with proofs.

Storage: For storing large amounts of data (e.g. NFT metadata) from Ethereum, zkPoS acts as a messenger between Ethereum smart contracts and EthStorage.

Throughout this process, the bus plays a vital role in connecting each step:

When consensus data is passed from Ethereum to zkOracle Network's computation or EthStorage's storage, zkPoS, together with state/event/transaction proofs, produces proofs that the recipient can quickly verify to obtain the exact data, such as the corresponding transactions.

When zkOracle Network needs to load data for computation from storage, it obtains the addresses of the data from the consensus network with zkPoS, then fetches the actual data from storage with zkNoSQL.

When data from zkOracle Network or Ethereum needs to be displayed in the final output forms, zkPoS generates proofs for the client (e.g., a browser) to quickly verify.
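The workflow and the role of the bus can be summarized as a small data-flow sketch. Everything here is a stand-in chosen for illustration: the `Bus` class replaces zkPoS and zkNoSQL with a plain hash check (which is not a zero-knowledge proof), the "program" loaded from storage is a single keyword, and all names are hypothetical. The sketch only shows which component hands what to which, and that every hand-off is checked by the receiver.

```python
import hashlib

def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

class Bus:
    """Stand-in for the zk bus: a 'proof' here is just a hash the receiver rechecks."""

    @staticmethod
    def send(payload: bytes) -> tuple[bytes, str]:
        return payload, digest(payload)

    @staticmethod
    def receive(payload: bytes, proof: str) -> bytes:
        if digest(payload) != proof:
            raise ValueError("proof check failed")
        return payload

def workflow(tx: bytes, storage: dict[str, bytes]) -> dict:
    # 1. Consensus: the transaction is agreed upon and included in a block.
    block = {"height": 1, "txs": [tx], "results": []}
    # 2. Computation: inputs arrive over the bus; the program comes from storage.
    data = Bus.receive(*Bus.send(tx))
    program = Bus.receive(*Bus.send(storage["program"]))
    result = data.upper() if program == b"uppercase" else data
    # 3. Consensus: the result is passed back, again checked on arrival.
    block["results"].append(Bus.receive(*Bus.send(result)))
    # 4. Storage: large outputs are kept in the storage network, keyed by hash.
    storage[digest(result)] = result
    return block

storage = {"program": b"uppercase"}
print(workflow(b"hello", storage)["results"])
```

Replacing the hash check with succinct proofs is what turns this plain pipeline into a trust-minimized one, since the receiver then no longer needs to trust the sender or see the full underlying data.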

Conclusion

Bitcoin has established a solid foundation for the creation of a World Computer v0 [23], successfully building a "World Ledger". Later on, Ethereum effectively demonstrated the "World Computer" paradigm by introducing a more programmable smart contract mechanism. With the aim of decentralization, the inherent trustlessness of cryptography, the natural economic incentives of MEV [24], the drive for mass adoption, the potential of ZK technology, and most importantly the need for decentralized general computation including machine learning, the emergence of a World Supercomputer has become a necessity.

The solution we propose will build the World Supercomputer by linking topologically heterogeneous P2P networks with zero-knowledge proofs. As the consensus ledger, Ethereum will provide the underlying consensus and use the block interval as the clock cycle for the entire system. As the storage network, Storage rollup will store large amounts of data and provide a URI standard to access the data. As the computation network, zkOracle network will run resource-intensive computations and generate verifiable proofs of computations. As the data bus, zero-knowledge proof technology will connect various components and allow data and consensus to be linked and verified.

About the Authors

Xiaohang Yu

Xiaohang Yu is a PhD candidate at Imperial College London, and Core Researcher of Hyper Oracle. Previously, he worked as a blockchain research lead in a cybersecurity firm.

Kartin

Kartin is Co-founder and CEO of Hyper Oracle. Previously, he worked at Google and TikTok.

msfew

msfew (Suning Yao) studies Computer Science at New York University, and researches at Hyper Oracle. Previously, he worked at Foresight Ventures, Google, and UnionPay.

Qi Zhou

Qi Zhou is the founder of EthStorage and QuarkChain. He holds a PhD in Electrical and Computer Engineering from Georgia Institute of Technology.

We are also grateful to Dr. Cathie So from the Privacy & Scaling Explorations Team of the Ethereum Foundation and Daniel Shorr from Modulus Labs for reviewing the content of this article.

References

[1] https://mirror.xyz/msfew.eth/KYcN_mB03V6cpc1LiMrHjA206LQQJkoh4zeIUjtLiC8

[2] https://twitter.com/bkiepuszewski/status/1645422967315111936

[3] https://twitter.com/0xDinoEggs/status/1643252532674801667

[5] The Definitive Guide to Sequencing

[6] https://twitter.com/ChainLinkGod/status/1533618278538457088

[7] https://twitter.com/Galileo_xyz/status/1545823081049886727

[8] https://notes.ethereum.org/@vbuterin/serenity_design_rationale#The-Layer-1-vs-Layer-2-Tradeoff

[9] https://twitter.com/_prestwich/status/1284174486674083840

[10] https://twitter.com/monad_xyz/status/1643663169951236101

[11] What are Rollups

[12] https://ethresear.ch/t/a-not-quite-cryptoeconomic-decentralized-oracle/6453

[13] https://twitter.com/adrian_brink/status/1656202217442123778

[14] https://www.morphstudio.xyz/

[15] https://mirror.xyz/msfew.eth/3EqlfRRdRPAInmjwYvNLfcSnxe7fHN6EcVfEUGEsuiY

[16] https://eips.ethereum.org/EIPS/eip-4804

[17] https://ethresear.ch/t/defining-zkoracle-for-ethereum/15131/19

[18] https://www.canva.com/design/DAFgqqAboU0/4HscC5E3YkFRFk3bB64chw/view#6

[19] https://github.com/zkonduit/ezkl

[20] https://www.moduluslabs.xyz/

[21] https://github.com/socathie/circomlib-ml

[22] https://github.com/ZeroSync/ZeroSync

[23] https://coingeek.com/bitcoin-as-a-world-computer/

[24] https://twitter.com/colludingnode/status/1643352627898462210
