Stanford Blockchain Review

Volume 3, Article No. 3

📚Author: Daniel Shorr – Modulus Labs

🌟Technical Prerequisite: Moderate/Advanced

Special thanks to Peiyuan Liao and the Polychain Monsters team.

Introduction

Meet “zkMon”: the world’s 1st “zero-knowledge proven Generative Adversarial Network” NFT collection – an artist that makes cryptographically authenticated AI monsters.

Working closely with the Polychain Monsters team, we’ve created zkMon as an NFT collection that showcases eight endearing pixelated creatures, including squirrels, raccoons, and dragons. Using ZKPs, the zkMon AI model is now able to sign and authenticate every single piece of the collection directly on Ethereum! This gives owners a cryptographic guarantee that the zkMon they purchased must’ve come from the original Polychain Monsters art model.

Our artistic maestro is a reimagined SN-GAN, fine-tuned to perfection and woven into a zk-circuit, resulting in delightful pixel art. But you might wonder, why go through all this trouble? After all, people have been turning generative AI art into NFTs for ages, so why do the work of verifying the underlying model itself? In this article, we will first explain the rationale behind verifying AI-art creations on-chain, before diving head-first into our technical implementation of zkMon.

NFTs and Authenticity

One of the fundamental promises of NFTs is, as the name suggests, “non-fungibility,” or authenticity as a digital artifact. NFTs rely on the cryptographic security of public blockchain networks for properties like traceable provenance and ownership. This is what gives us the ability to “own” digital data, and by extension, gives that data value. When it comes to AI-generated art, however, the full extent of the provenance paradigm is currently unfulfilled.

Until zkMon, AI art had to be created fully off-chain, then brought on-chain manually, despite the art generation process being digitally native. This means that, until now, outputs generated from an AI artist could never be cryptographically linked to the original art model.

zkMon completes the NFT promise for AI art by “bringing the AI artist on-chain,” thereby giving the creation process itself cryptographic traceability, auditability, and as a consequence, complete provenance (of both ownership and asset).

Using public blockchain and its underlying cryptographic architecture as the ultimate record-keeper, we’re able to trace otherwise arbitrary and identical-looking packets of bytes through time. This gives the previously ethereal realm of digital information the crucial properties of uniqueness and traceable ownership provenance. That, in turn, enables the notion of scarcity, since digital goods are now distinct (both in digital ID and ownership provenance) and verifiably genuine. This scarcity and our common confidence in a digital ground truth is precisely what allows us to “own” digital assets [1].

As a concrete example, suppose our buddy Santiago is the proud owner of CryptoPunk #9159. We know that he owns THE CryptoPunk #9159, and not some screen-grabbed copycat, because we can clearly trace the history of #9159 all the way back to its original construction/minting. That path relies on Ethereum to keep the record and to define this packet of bytes as distinguishable from any other. This is why NFT projects like CryptoPunks and Nouns, whose creation processes themselves are deeply woven into the narratives of their communities, choose to encode their underlying generation scripts in smart contracts.

Unlocking Infinite Expressivity

Of course, traceable provenance only pertains to transactions on blockchains. In other words, each time NFTs have to rely on off-chain input or dependencies, those portions are susceptible to manipulation or failure. For many types of assets, there currently exists no robust way to address this issue (see: the oracle problem). After all, a conventional drawing has to be created “off-chain” before being manually brought into the blockchain environment through the minting process.

But the AI generation process is digitally native. From training to inference, there is no conceptual reason that the AI model itself cannot enjoy the benefits of having every one of its operations run on-chain — including superpowers like verifiable asset provenance (i.e. this output actually came from that model) or provable attribution and ownership.

This “ownership authentication” opens the door to truly decentralized, autonomous artists, as well as more egalitarian structures for acknowledgment/attribution and compensation for AI-generated results [2]. As on-chain computation abilities advance, this may open a whole new range of “authentic” AI-generated applications.

Regardless of whether zkMon itself as a collection engenders any interest in the broader market, we are nonetheless opening the doors to a new world. A world where, thanks to blockchain-based accountability, AI models can power robust new engines of creativity and organization. From prompting to auditing, powering DAOs to building AI-native economies, the experiment is really only just beginning.

Zero Knowledge Proofs x AI Generative Art

With all those implications in mind, let’s dive into the tech behind the scenes. To get started, we need to understand the basics behind Zero Knowledge Proofs (ZKPs), and AI generative art (specifically, GANs).

Zero-knowledge proofs are a computation integrity technology. To put it plainly, ZKPs prove that some computation was executed correctly (without risk of tampering). Though this alone doesn’t make ZK unique, the magic shows up in the compute overhead of verification. Specifically, verifying the correctness guarantees of a zero-knowledge proof is significantly easier than running the computation naively — a property often known as succinctness. In the case of a public blockchain (such as Ethereum) where computation is a scarce resource, if we can perform compute verification on-chain (as opposed to the original, compute-heavy operation), we can bring more sophisticated algorithms on-chain — all without giving up a single ounce of blockchain security.

On the other half of the equation, AI generative art combines the power of artificial intelligence with artistic expression. It begins with collecting a large dataset of visual data, typically a set of images, which serves as the training data for the AI. The model then analyzes and learns from this data, developing its own internal representation of artistic styles, colors, shapes, and other visual elements. Once trained, the AI model can generate new artwork by starting from a random vector acting as a seed, and slowly refining this internal representation until out pops a fully-fledged image.

GANs, or Generative Adversarial Networks, are a type of generative model which trains via a detective-and-forger-esque game, with the forger (generator model) attempting to create images from random noise which mimic those seen in the actual training set, and the detective (discriminator model) attempting to determine, given an image, whether that image is real or counterfeit. At the end of this game, the forger/generator model is producing images highly similar to those found in the training set, and has thus learned to generate novel artwork in the style which it has already seen.
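The detective-and-forger game is usually written as a minimax objective. The textbook form from the original GAN formulation is shown below, with $G$ the generator, $D$ the discriminator, $x$ a real training image, and $z$ the random noise vector. This is the standard objective only; it is not necessarily the exact loss used to train zkMon, since SNGAN-style pipelines often use variants of it.

```latex
\min_G \max_D \;
  \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_z}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The detective $D$ pushes the expression up by scoring real images near 1 and forgeries near 0, while the forger $G$ pushes it down by producing images that $D$ scores as real.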

Creating the Creator

Knowledge Distillation

To get started, we need to first create our AI artist and set up our GAN. An old deep learning trick for compressing models, i.e. taking a larger model and creating a smaller model which performs the same task with roughly the same proficiency, is that of knowledge distillation. In the context of supervised learning, this involves taking the larger “teacher network” and training a smaller “student network” on both the true labels from the training set as well as the teacher’s output labels — the student thus is given feedback on not only the ground truth, but also what the teacher thinks of each training example.
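As a minimal sketch of the supervised version of this idea, the student's loss can be written as a weighted sum of a "ground truth" term and a "what the teacher thinks" term. The function names, the `alpha` weighting, and the `temperature` softening are illustrative assumptions (they follow the common textbook recipe), not details from our training code.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(target_probs, predicted_probs):
    """Cross-entropy between a target distribution and a predicted one."""
    return -sum(t * math.log(p) for t, p in zip(target_probs, predicted_probs) if t > 0)

def distillation_loss(student_logits, teacher_logits, true_label,
                      alpha=0.5, temperature=2.0):
    """Hypothetical helper: alpha weights the true label, (1 - alpha) the teacher."""
    one_hot = [1.0 if i == true_label else 0.0 for i in range(len(student_logits))]
    # Feedback from the ground truth.
    hard = cross_entropy(one_hot, softmax(student_logits))
    # Feedback from the teacher's (softened) opinion of the same example.
    soft = cross_entropy(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))
    return alpha * hard + (1.0 - alpha) * soft

teacher = [2.0, 0.5, -1.0]
agreeing_student = [2.0, 0.5, -1.0]
disagreeing_student = [-1.0, 0.5, 2.0]
loss_agree = distillation_loss(agreeing_student, teacher, true_label=0)
loss_disagree = distillation_loss(disagreeing_student, teacher, true_label=0)
```

A student that agrees with both the label and the teacher ends up with the lower loss, which is exactly the pressure that pulls the small model toward the large one.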

Generative “Distillation”

Our task is slightly different. Rather than taking an image and categorizing it into one of many buckets, we instead seek to train a model which takes in a latent “noise” vector and turns it into an image. Thus, rather than training on teacher labels for an input, we simply use the teacher network to generate conditional examples for our student model, effectively collecting training data by querying the teacher model. Our basic data collection pipeline is as follows:

Generate a basket of prompt templates for Midjourney, describing the various characters and monsters we wish to be eventually generated by our model. For example,

“64-bit pixel art style, {species} monster wielding {equipment} over a dark background, full body visible”

Unfortunately, Midjourney doesn’t yet have a programmatic API, and the only way to interact with their model is through the Discord bot. Fortunately, Discord has a consistent interface which can be automated. That’s right, we used Hammerspoon to physically automate the tasks of copying a generated prompt, pasting it into Discord’s chat interface, and hitting “enter” to activate Midjourney’s generation cycle before starting the whole process all over again. Forty thousand times. (Yes, we actually did this. And no, it was not fun.)
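The prompt-generation step above can be sketched in a few lines of Python. The template is the example from this article and the species come from the collection description; the equipment list and the `build_prompts` helper are hypothetical placeholders, not our actual prompt basket.

```python
from itertools import product

TEMPLATE = (
    "64-bit pixel art style, {species} monster wielding {equipment} "
    "over a dark background, full body visible"
)

# Species named in the article; the equipment values are made up for illustration.
SPECIES = ["squirrel", "raccoon", "dragon"]
EQUIPMENT = ["a sword", "a shield"]

def build_prompts(template, species, equipment):
    """Return one (species, equipment, prompt) triple per combination."""
    return [
        (s, e, template.format(species=s, equipment=e))
        for s, e in product(species, equipment)
    ]

prompts = build_prompts(TEMPLATE, SPECIES, EQUIPMENT)
```

Keeping the species and equipment next to each prompt pays off later, since those same values become the conditioning labels for the student model.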

The Student Becomes the Master

With the training data collected, we march onward to preprocessing! Species and equipment labels are parsed from the prompts associated with each image, and images themselves are split into 4 (Midjourney generates four at a time) and collated into tensor form for training.
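Here is a rough sketch of those two preprocessing steps, using plain nested lists in place of real image tensors. It assumes the four images arrive as a single 2x2 grid and that prompts follow the example template shown earlier; both the regular expression and the helper names are hypothetical.

```python
import re

# Mirrors the example template; real prompts may need a looser pattern.
PROMPT_RE = re.compile(
    r"pixel art style, (?P<species>.+?) monster wielding (?P<equipment>.+?) over"
)

def parse_labels(prompt):
    """Recover the (species, equipment) labels from a generated prompt."""
    match = PROMPT_RE.search(prompt)
    if match is None:
        raise ValueError(f"prompt does not match the template: {prompt!r}")
    return match.group("species"), match.group("equipment")

def split_grid(image):
    """Split a 2H x 2W image (a list of pixel rows) into four H x W tiles.

    Tiles are returned in reading order: top-left, top-right,
    bottom-left, bottom-right.
    """
    height, width = len(image), len(image[0])
    if height % 2 or width % 2:
        raise ValueError("grid dimensions must be even")
    h, w = height // 2, width // 2
    top, bottom = image[:h], image[h:]
    return [
        [row[:w] for row in top],
        [row[w:] for row in top],
        [row[:w] for row in bottom],
        [row[w:] for row in bottom],
    ]

grid = [
    [1, 1, 2, 2],
    [1, 1, 2, 2],
    [3, 3, 4, 4],
    [3, 3, 4, 4],
]
tiles = split_grid(grid)
labels = parse_labels(
    "64-bit pixel art style, dragon monster wielding a sword "
    "over a dark background, full body visible"
)
```

Each of the four tiles inherits the labels parsed from the prompt that produced the grid.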

Finally, we arrive at the training process. I won’t bore you with the model details here, but the deep learning enthusiasts among you might be keen to know that —

The architecture of the generator is that of an upsampling convolutional network, complete with residual blocks and the classic 3x3 convolutional filters all throughout.

The overall training pipeline is that of SNGAN (using spectral normalization as a regularizer on the discriminator, an alternative way of approximating the Lipschitz constraint introduced in the foundational WGAN work).
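For the curious, the core of spectral normalization is small enough to sketch in pure Python: estimate a weight matrix's largest singular value by power iteration, then divide the weights by it. This is an illustrative toy under simplifying assumptions (a fixed starting vector and many iterations per call), not our training code; real SNGAN implementations typically keep a persistent random vector and run a single iteration per training step.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def transpose(matrix):
    return [list(col) for col in zip(*matrix)]

def normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]

def spectral_norm(weight, iterations=50):
    """Estimate the largest singular value of `weight` by power iteration.

    Assumes the starting vector is not orthogonal to the top singular
    direction and that `weight` is not the zero matrix.
    """
    weight_t = transpose(weight)
    v = normalize([1.0] * len(weight[0]))
    for _ in range(iterations):
        u = normalize(matvec(weight, v))
        v = normalize(matvec(weight_t, u))
    # With v close to the top right-singular vector, ||W v|| approximates sigma.
    return math.sqrt(sum(x * x for x in matvec(weight, v)))

def spectrally_normalize(weight, iterations=50):
    """Rescale `weight` so that its largest singular value is roughly 1."""
    sigma = spectral_norm(weight, iterations)
    return [[a / sigma for a in row] for row in weight]

weight = [[3.0, 0.0], [0.0, 1.0]]
sigma = spectral_norm(weight)
normalized = spectrally_normalize(weight)
```

Capping every discriminator layer's largest singular value at roughly 1 is what keeps the detective from changing its mind too sharply between nearby images.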

For more details (including training hyperparameters and samples), see our code repo, a modified version of SNGAN’s!

Adding in the Zero Knowledge Proofs

Now that we’ve completed setting up the SNGAN, we’re ready to dive into the details of the Zero Knowledge Proofs. To keep things simple, we will summarize the “what,” “why,” and “how” of our implementation.

What We Used

For all the jargon-lovers out there, we used the PSE fork of Halo2 with KZG commitments and recursively aggregated and proved our models using Axiom’s Halo2 recursive verifier.

If you don’t understand what any of this means, don’t worry! The bottom line here is that most modern zero-knowledge proving systems have a prover frontend, where the computation being proven (the generative model in this case) is encoded. Halo2 serves as this encoding layer, and additionally provides support for a KZG backend: the cryptographic machinery which converts the frontend description of the model into a small proof that can be verified on-chain.

For those of you who are curious — a recursive verifier is a verifier whose execution is itself proven; a “proof of correct proof verification”, if you will. The reason for having such a step is that the initial proof size might be too large to directly send on-chain, and thus an intermediate compression step is necessary.

Why We Used It

Halo2 + KZG ended up being our proof system of choice for several reasons. Firstly, the PSE team at the Ethereum Foundation, alongside many other contributors to the library, has done an exceptional job of creating helpful developer tooling and a flexible interface to write program specifications in. Moreover, Halo2 boasts several modern ZKP bells and whistles, including lookup tables, in which a verifier can enforce that certain values belong to a particular pre-agreed set, and rotations, in which a prover can reference data values from rows other than the one which a particular constraint applies to.
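To make lookups and rotations a little more concrete, here is a toy check in plain Python of what each kind of constraint says about a table of values. This is not the Halo2 API and involves no cryptography; the ReLU table, the running-sum example, and the function names are all hypothetical illustrations.

```python
# Lookup table: every allowed (input, output) pair of ReLU on a small signed range.
RELU_TABLE = {(x, max(x, 0)) for x in range(-8, 8)}

def lookup_holds(inputs, outputs, table):
    """Lookup: every (input, output) row must be a member of the agreed table."""
    return all((x, y) in table for x, y in zip(inputs, outputs))

def running_sum_holds(values, acc):
    """Rotation: the constraint on row i also reads `acc` from row i + 1.

    `acc` has one more row than `values` and must start at 0.
    """
    return acc[0] == 0 and all(
        acc[i + 1] == acc[i] + values[i] for i in range(len(values))
    )

honest_relu = lookup_holds([-3, 0, 5], [0, 0, 5], RELU_TABLE)
cheating_relu = lookup_holds([-3, 0, 5], [0, 0, 4], RELU_TABLE)
honest_sum = running_sum_holds([1, 2, 3], [0, 1, 3, 6])
cheating_sum = running_sum_holds([1, 2, 3], [0, 1, 3, 7])
```

Lookups are a natural fit for non-linear functions like activations, while rotations suit accumulations such as the running sums inside a dot product.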

How We Used It

We drew heavily on gate designs and gadgets we’d created for our previous on-chain deep learning project, Leela vs. the World. Essentially, for each individual model operation (e.g. convolutional layers, activation functions, etc.), we painstakingly replicated the operation within both Rust and Halo2, and applied significant optimizations so that the prover runs even faster and with less memory usage. For those of you who are curious about these gate designs, check out our open-source GitHub repository [3].

The Results

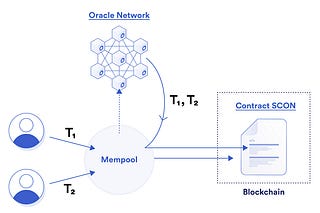

What was the end result of all this? Two things — firstly, a standalone verifier whose sole job is to strictly guard what is allowed to be posted on-chain: such a verifier only accepts proofs generated from the exact circuit which describes the generative model, and thus only outputs from such a model are allowed on-chain.

Secondly, a prover which both runs the model’s generation process and produces a proof of correct generation alongside it. Both the art output and the proof get submitted to the chain, and the former is minted as a real zkMon NFT if and only if the latter is accepted by the on-chain verifier.
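The “if and only if” above boils down to a simple gate, sketched here in Python rather than as a smart contract. The `Collection` class and `placeholder_verify` are hypothetical stand-ins: the placeholder has no cryptographic meaning whatsoever and only marks the spot where the real on-chain verifier would check a proof against the model's circuit.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class Collection:
    """Toy model of the mint gate: only verified outputs are recorded."""
    verify: Callable[[bytes, bytes], bool]
    minted: List[bytes] = field(default_factory=list)

    def submit(self, image: bytes, proof: bytes) -> bool:
        # Reject anything the verifier does not accept; nothing is minted.
        if not self.verify(image, proof):
            return False
        self.minted.append(image)
        return True

def placeholder_verify(image: bytes, proof: bytes) -> bool:
    """NOT a real verifier: a stand-in so the control flow can be exercised."""
    return proof == b"proof-for:" + image

collection = Collection(verify=placeholder_verify)
accepted = collection.submit(b"zkmon-pixels", b"proof-for:zkmon-pixels")
rejected = collection.submit(b"screen-grab", b"junk")
```

Only the first submission ends up in `collection.minted`; the second never makes it past the gate.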

Conclusion

And voila, welcome to a world of machine creativity, now authenticated with cryptographic proofs. A world where AI models stamp their outputs with digital signatures that are nearly impossible to fake.

One where we can fairly manage AI models, build autonomous collaborators, and of course, create AI based services (artistic or otherwise) that can never betray our trust — all made possible thanks to zero-knowledge cryptography.

Over the past couple of months, frens across the ecosystem, from protocols just getting off the ground to services already supporting millions, have come to us looking to integrate ZKML for themselves.

Unfortunately, we are, quite literally, at the limits of modern provers. Even as we eke out more algorithmic efficiency at every turn, it’s clear to us from our time building Rocky, Leela, and now, zkMon, that today’s proving paradigm has become a hard ceiling on the ambition of the projects we’re itching to build.

Everyone says that an image is worth a thousand words. But as it turns out, it’s actually worth ~1,500 lines of code, ~300,000 constraints, 300,000 gas, and a mind-blowing AWS bill.

The cost of intelligence is simply too high for scale…

For now.

About the Author

Daniel Shorr is the CEO and cofounder of Modulus Labs. He spends his time helping others surrender the power to secretly manipulate algorithms (read: zkml). Prior to that, he read a lot of sci-fi at Stanford.

References

[1] https://variant.fund/articles/nfts-make-the-internet-ownable/

[2] https://blog.simondlr.com/posts/decentralized-autonomous-artists