#17 - State of Modular Blockchains

A Paradigm That Brings Fast, Secure, and Composable Blockchains

Stanford Blockchain Review

Volume 2, Article No. 7

📚 Author: Roy Lu – HashKey Capital

🌟 Technical Prerequisite: Moderate

Introduction

Modular blockchains are a new paradigm in blockchain design. Instead of one monolithic blockchain performing all of the functions, layers in a modular blockchain specialize and optimize around a specific function. This architecture allows developers to provide a highly customized user experience for mass adoption.

The modular blockchain space has flourished along the layers of Execution, Settlement, Consensus, and Data Availability, while opening doors to novel categories such as Rollup-as-a-Service and volitions.

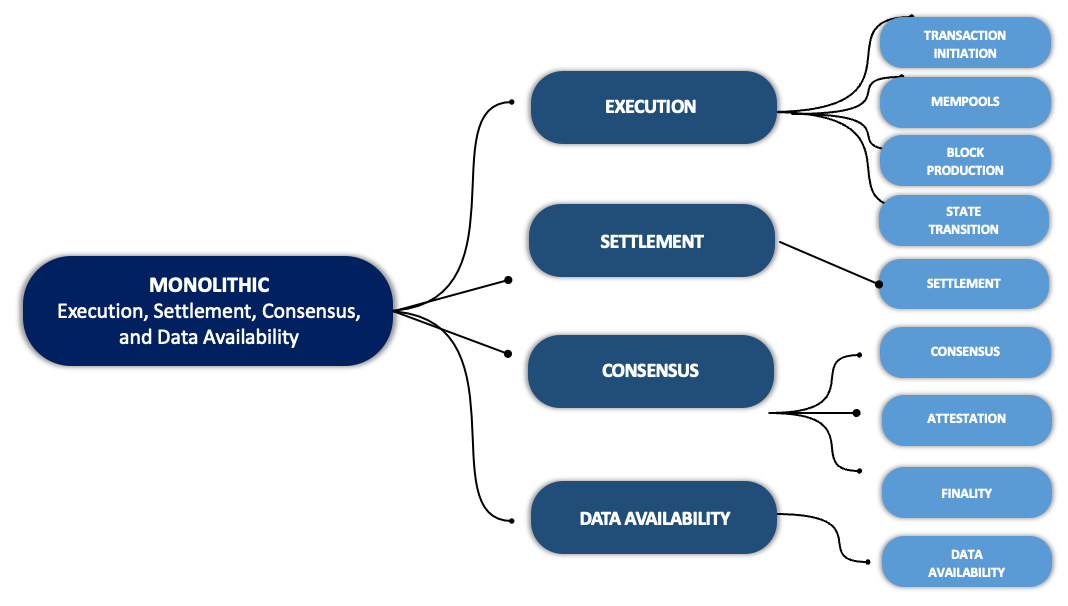

The four main layers of a blockchain are execution, settlement, consensus, and data availability.

However, this definition understates the full composability and extensibility of blockchains. A more detailed taxonomy shows that transaction initiation, mempools, block production, state transition, settlement, consensus, attestation, finality, and data availability are each a meaningful layer of a blockchain that can be individually rearchitected, scaled, and optimized.

For each of these layers, modular blockchains provide two main benefits: 1) optimization through specialization, and 2) composability. For example, centralized sequencers provide higher throughput on L2’s; ZK-rollups can provide faster time to finality on the settlement layer while maintaining security; and EIP-4844 (proto-danksharding) expands the data availability layer on Ethereum and gives L2’s options on where to store their data [1].

From Monolithic to Modular Blockchains: History Rhymes

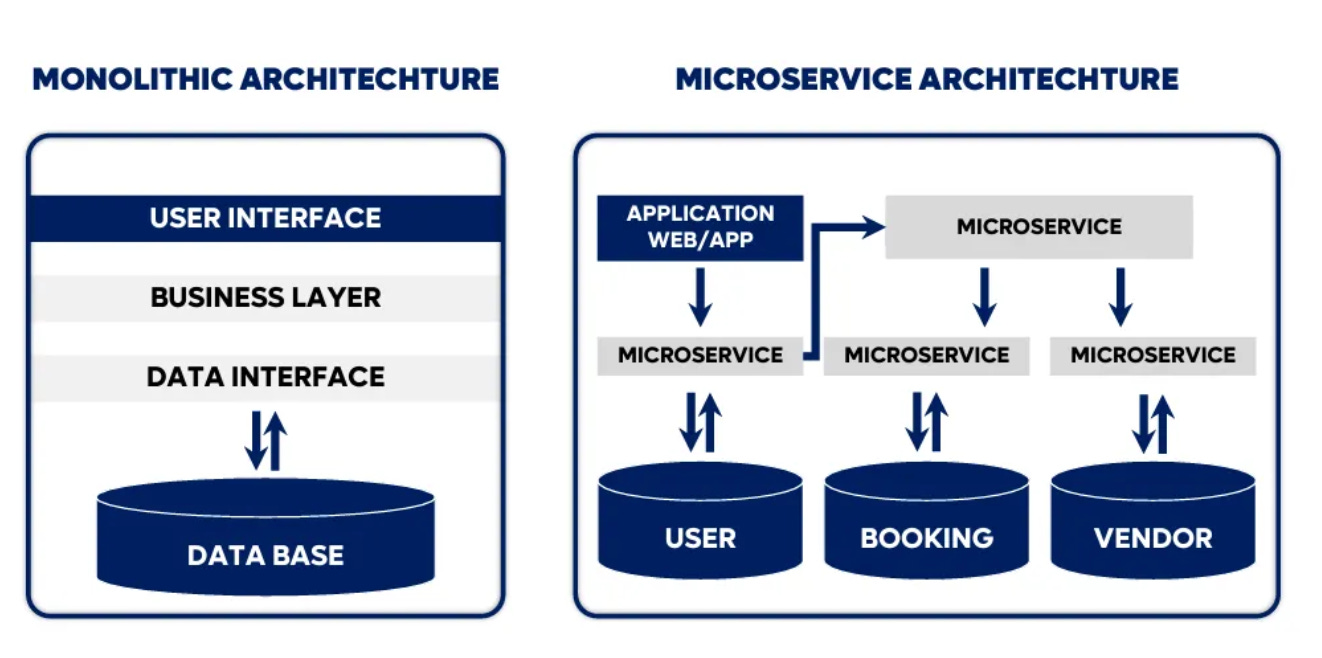

History doesn’t repeat itself, but it rhymes. Web2 faced similar challenges with monolithic server architecture until the concept of microservices was introduced in 2011 at a workshop of software architects. Twelve years ago, web2 servers were monolithic: functionally disparate pieces of code sat in the same code base and were deployed and updated in tandem. This tight coupling caused problems in maintainability, availability, and scalability. As software teams grew larger, if one team made one mistake, the entire service would need to be updated. It also forced all software teams to move at the same pace for deployment.

By 2014, microservices had become popular enough to be considered for large enterprises. Using microservices, engineers can spin up multiple instances to service high loads. Maintenance is simpler because code updates only impact a particular microservice. Availability is higher overall with modular optimization. Nowadays, microservices have become standard in the tech industry.

The monolithic blockchain mirrors the monolithic server problem. To tackle it, several L1’s sacrificed decentralization for scalability, while others attempted to change the consensus algorithm to improve scalability. While these efforts have paid off in some cases, the industry consensus is to solve the problem through modularization, that is, ‘separating the concerns’ of different blockchain modules. The modular blockchain paradigm is a meaningful step toward solving the blockchain trilemma, and it also provides composability to developers and a better experience for users.

Execution

What is execution?

Execution is composed of VMs (virtual machines) that facilitate state transitions. State transitions are made up of blocks of transactions. A modular execution layer increases scalability while keeping the same level of security and decentralization through the underlying settlement and consensus layers.
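To make the idea of a state transition concrete, here is a minimal sketch in Python. It assumes a toy account-balance state and a single transaction type (a transfer); the names `Tx`, `apply_tx`, and `apply_block` are hypothetical and do not correspond to any real VM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    sender: str
    recipient: str
    amount: int


def apply_tx(state: dict, tx: Tx) -> dict:
    """Return the state after one transfer; reject invalid transfers."""
    if tx.amount <= 0 or state.get(tx.sender, 0) < tx.amount:
        raise ValueError(f"invalid transaction: {tx}")
    new_state = dict(state)  # never mutate the previous state
    new_state[tx.sender] -= tx.amount
    new_state[tx.recipient] = new_state.get(tx.recipient, 0) + tx.amount
    return new_state


def apply_block(state: dict, block: list) -> dict:
    """A block is an ordered list of transactions applied one after another."""
    for tx in block:
        state = apply_tx(state, tx)
    return state


genesis = {"alice": 10, "bob": 0}
block = [Tx("alice", "bob", 4), Tx("bob", "alice", 1)]
final_state = apply_block(genesis, block)
print(final_state)  # {'alice': 7, 'bob': 3}
```

The execution layer is, in this simplified view, whatever computes `apply_block`; the settlement and consensus layers decide whether the resulting state is accepted.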

L2’s

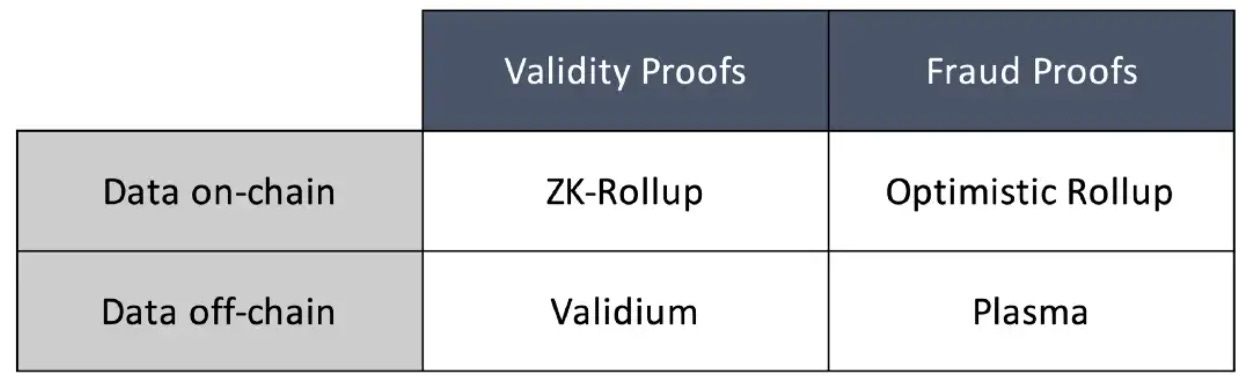

Amongst L2’s, there are four types of execution scalability solutions: Optimistic Rollup, Validity Rollup (aka ZK Rollup), Plasma, and Validium, which share comparable security levels to monolithic state transitions [2]. There are two other types of L2-like execution scalability solutions: side chains and state channels, which are limited in security or scope, making them less desirable.

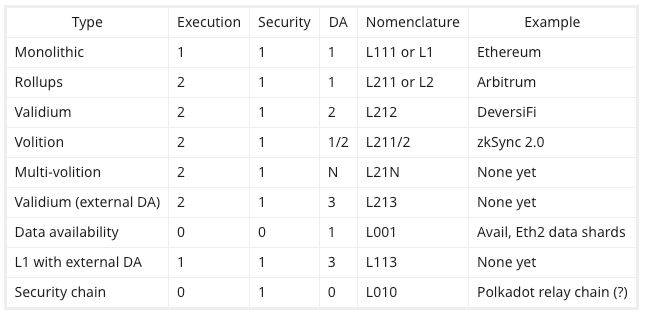

L2 rollups are similar to the idea of Danksharding [3], where state transitions are validated through core consensus and states are stored on the DA layer of choice. Because of the similarities in design between L2’s and danksharding, the Ethereum community generally welcomes the adoption of L2’s. (The table is drawn from a tweet by Vitalik and a related post.)

Rollup-as-a-Service

Rollup-as-a-service (RaaS) emphasizes customizability, composability, and compatibility. As opposed to launching smart contracts, developers can launch their own rollups and customize the execution environment of their rollups.

Some RaaS projects even allow developers to choose their preferred consensus layer and DA layer, further extending customizability to cater to specific use cases.

State of Execution

Security

Security at the execution layer is a spectrum. ZK-based projects such as Scroll, Aztec, and Matter Labs have the strongest security guarantees, since each transaction’s validity rests on cryptographic proofs. Optimistic rollups and some RaaS projects with their own consensus layers derive security from their own crypto-economics. Long-term, ZK-based projects will likely win out in security.

Time to Finality

Time to finality determines how quickly a user can have their transaction confirmed. In theory, optimistic rollups and optimistic RaaS inherit finality from their underlying consensus layer. Currently, Ethereum finality takes ~15 minutes, because validators representing at least 2/3 of the total staked ETH on the network must vote for a block before it is considered finalized. With over 500,000 validators [4], transmitting attestations induces substantial lag. This long finality means deposits into optimistic rollups take up to 15 minutes, and withdrawals may take days because of the fraud-proof window.
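The rough arithmetic behind that figure can be sketched as follows. The constants are assumptions not stated in this article: 12-second slots, 32 slots per epoch, and finalization about two epochs after a block is proposed in the typical case. The function names are hypothetical.

```python
from fractions import Fraction

# Assumed Ethereum mainnet parameters (not taken from this article).
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
EPOCHS_TO_FINALITY = 2  # typical case, assuming healthy participation


def is_supermajority(attesting_stake: int, total_stake: int) -> bool:
    """True when at least 2/3 of the total stake has voted for the block."""
    return Fraction(attesting_stake, total_stake) >= Fraction(2, 3)


def minutes_to_finality() -> float:
    """Time for the assumed number of epochs to elapse, in minutes."""
    return SECONDS_PER_SLOT * SLOTS_PER_EPOCH * EPOCHS_TO_FINALITY / 60


print(minutes_to_finality())          # 12.8
print(is_supermajority(20, 30))       # True: exactly 2/3
print(is_supermajority(19, 30))       # False: just under 2/3
```

Under these assumptions the best case is about 12.8 minutes, which is in line with the ~15 minute figure once network delays are included.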

Compatibility

Compatibility is low in the current fragmented landscape of virtual machines and languages. Popular VMs such as the EVM, Solana VM, and Move VM force developers to choose a particular runtime environment. To make matters worse, zkEVMs that aim to be EVM compatible are not truly compatible in execution environment, as evidenced by the invention of DSLs (domain-specific languages) such as StarkWare’s Cairo and Aztec’s Noir, which worsen the developer experience.

RaaS aims to solve this problem by allowing smart contracts to deploy once and run everywhere. However, some RaaS implementations cause further fragmentation of liquidity and dizzying deployment choices.

Customizability

With the advent of Rollup-as-a-Service, customizability is fast improving for blockchain developers. Caldera provides near white-glove onboarding and rollup design service. Saga allows the creation of chainlets that remove the burden of having to recruit validators. Eclipse allows Solana contracts to easily redeploy into an EVM-compatible environment.

Furthermore, new categories such as volitions are now available, giving developers optionality around the DA layer for gas cost optimization. Project teams can now choose to benefit from the security of L1’s while storing their data in a DA layer of choice.

DevEx (developer experience)

In general, devex at the execution layer leaves much to be desired. The portability of code is poor and redeployments are costly, as smart contracts require auditing when deployed on different VMs. Auditing is expensive, and so is any vulnerability introduced during redeployment.

Furthermore, zkEVMs are tiered into Type 1, Type 2, Type 3, and Type 4 (see Vitalik’s article [5]). However, many protocols claim a level of EVM compatibility above reality, leaving developers in a minefield of smart contract traps where their code doesn’t run as expected.

What’s next?

zkEVMs - in the short term, Type 2 through Type 4 zkEVMs benefit from early adoption thanks to a faster go-to-market; however, in the long run, Type 1 zkEVMs will provide the optimal devex, compatibility, and security. As Type 1 zkEVM code is mostly open source, the advancements in zkEVM will benefit the whole ecosystem.

RaaS with great PMF - there are overwhelmingly diverse flavors of RaaS, each with its own design pattern and specific tradeoffs. It is important that a great RaaS provide L1 security, meaningful liquidity, and better devex to lead to stronger product-market fit.

Settlement

What is settlement?

Settlement is a layer unique to rollups and doesn’t exist in monolithic chains. This layer anchors rollups to the base chain, establishes security, and provides objective finality if a dispute occurs on another chain.

The settlement layer usually consists of a smart contract on L1 that holds L2 transactions and state roots, a smart contract on L1 for bridging assets to and withdrawing assets from L2, and a sequencer on L2 that handles block production and message passing.

In the case of optimistic rollups, an additional smart contract is usually needed for fraud-proof arbitration. For ZK rollups, the ZK proofs are usually stored off-chain, but an on-chain smart contract can be used to retrieve the proofs and validate message and transaction inclusion.

While all of this may be dense to digest, the key thing about the settlement layer is that it provides L1 security and L1 finality to L2’s. The security and finality provided by the settlement layer, combined with the execution scaling on L2, together make modular blockchains the preferred paradigm for solving the blockchain trilemma.
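Checking that a transaction is included under a committed root is commonly done with a Merkle proof. The sketch below is a simplified illustration, assuming SHA-256 and a plain binary tree that duplicates the last node on odd-sized levels; real rollups use their own tree layouts and hash functions, and the function names here are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves: list, index: int):
    """Return (root, proof); proof is a list of (sibling_hash, sibling_is_left)."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last node on odd levels
        sibling = index ^ 1          # neighbour within the same pair
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_inclusion(leaf: bytes, proof: list, root: bytes) -> bool:
    """Recompute the path from the leaf to the root using only the proof."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


txs = [b"tx0", b"tx1", b"tx2", b"tx3", b"tx4"]
root, proof = merkle_root_and_proof(txs, 2)
print(verify_inclusion(b"tx2", proof, root))    # True
print(verify_inclusion(b"bogus", proof, root))  # False
```

The verifier only needs the root and a proof whose length grows with the logarithm of the number of transactions, which is what makes on-chain verification affordable.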

State of Settlement

Finality

In the case of optimistic rollups, finality is assumed to be instant based on game-theoretic security. Because users know that a dishonest validator would ultimately get slashed, they can assume that a transaction is final upon processing, even though a transaction isn’t truly final until it’s settled in an L1 block. Furthermore, when disagreements arise, fraud-proof arbitration allows up to a week to resolve the dispute.
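The gap between assumed and settled finality can be illustrated with a toy model of a settlement contract. This is a sketch under stated assumptions: a fixed seven-day challenge window (matching "up to a week"), timestamps in seconds, and a `challenge` call that merely flags a commitment as disputed without performing arbitration. `ToySettlement` and its methods are hypothetical names.

```python
from dataclasses import dataclass

CHALLENGE_WINDOW = 7 * 24 * 3600  # seconds; assumed one-week window


@dataclass
class Commitment:
    state_root: str
    posted_at: int
    challenged: bool = False


class ToySettlement:
    def __init__(self):
        self.commitments = []

    def propose(self, state_root: str, now: int) -> int:
        """Record a new L2 state root and return its index."""
        self.commitments.append(Commitment(state_root, now))
        return len(self.commitments) - 1

    def challenge(self, idx: int, now: int) -> bool:
        """Flag a commitment as disputed; only allowed inside the window."""
        c = self.commitments[idx]
        if now - c.posted_at < CHALLENGE_WINDOW and not c.challenged:
            c.challenged = True
            return True
        return False

    def is_final(self, idx: int, now: int) -> bool:
        """Final only once the window has passed without a challenge."""
        c = self.commitments[idx]
        return not c.challenged and now - c.posted_at >= CHALLENGE_WINDOW


s = ToySettlement()
i = s.propose("0xabc", now=0)
print(s.is_final(i, now=3600))              # False: still inside the window
print(s.is_final(i, now=CHALLENGE_WINDOW))  # True: window elapsed, no dispute
```

Users who treat the transaction as final after an hour are relying on the economic assumption, not on `is_final`.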

Fraud Proofs

Diving deeper, there are two types of fraud proofs: interactive and non-interactive. Non-interactive proofs can reduce the number of steps in dispute resolution. However, the size of transactions, and the complexity of smart contracts that call for L2 account states unavailable on L1’s, make non-interactive proofs challenging and expensive to implement.
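One common way to structure an interactive fraud proof is a bisection game; the article does not say which scheme it has in mind, so treat the following as an illustrative sketch. It assumes both parties have committed to the state after every execution step, agree on the starting state, and disagree on the final state. The toy step function and all names are hypothetical.

```python
def find_disputed_step(honest_trace: list, claimed_trace: list):
    """Binary search for a step i where the traces agree at i but not at i + 1."""
    lo, hi = 0, len(honest_trace) - 1
    assert honest_trace[lo] == claimed_trace[lo], "must agree on the start"
    assert honest_trace[hi] != claimed_trace[hi], "must disagree on the end"
    rounds = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if honest_trace[mid] == claimed_trace[mid]:
            lo = mid  # dispute lies in the second half
        else:
            hi = mid  # dispute lies in the first half
        rounds += 1
    return lo, rounds


def run(steps: int, corrupt_at=None) -> list:
    """Toy deterministic execution; optionally corrupt one step's output."""
    trace = [1]
    for i in range(1, steps + 1):
        nxt = (trace[-1] * 3 + 1) % 1_000_003
        if i == corrupt_at:
            nxt += 1
        trace.append(nxt)
    return trace


honest = run(16)
claimed = run(16, corrupt_at=11)
step, rounds = find_disputed_step(honest, claimed)
print(step, rounds)  # 10 4
```

After four rounds of interaction, only the single transition from step 10 to step 11 needs to be re-executed by the arbiter, rather than all 16 steps.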

Validity Proofs

The power of a validity proof is that you only need to prove it once and verify it once, instead of having a massive consensus network with up to hundreds of thousands of nodes reprocess the transaction for verification. Validity proofs can be highly efficient because they prove each step of the state machine execution, and on-chain verifiers only need to verify the proof without having to repeat the execution. Right now, proving time and proving costs are still quite high, but proving efficiency is improving at breakneck speed. Proving is also very parallelizable, so one could aggregate a number of provers and process the job in parallel, thereby increasing overall speed. Ultimately, validity proofs could improve L2 settlement finality asymptotically towards the speed of block production.

Censorship Resistance

Most L2’s have a single sequencer, which is a point of potential censorship risk. To combat this risk, L2’s have implemented a separate transaction queue so that transactions must be handled even in the face of a rogue sequencer. However, such extreme measures may cause differences between L1 and L2 states and are therefore not an ideal solution.
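The separate queue can be pictured as a protocol rule that overrides the sequencer. The sketch below is a toy model, assuming that forced transactions are submitted on L1 and must be included once a fixed number of L1 blocks has passed; `ToyRollup`, `force_delay`, and the transaction strings are hypothetical and do not describe any specific L2.

```python
from collections import deque


class ToyRollup:
    def __init__(self, force_delay: int):
        self.force_delay = force_delay  # L1 blocks before inclusion is mandatory
        self.forced_queue = deque()     # (tx, L1 block at submission), in order
        self.l2_chain = []

    def submit_forced(self, tx: str, l1_block: int) -> None:
        """A user bypasses the sequencer by queueing a transaction on L1."""
        self.forced_queue.append((tx, l1_block))

    def produce_block(self, sequencer_txs: list, l1_block: int) -> list:
        """Overdue forced transactions go first, whatever the sequencer chose."""
        overdue = []
        while (self.forced_queue
               and l1_block - self.forced_queue[0][1] >= self.force_delay):
            overdue.append(self.forced_queue.popleft()[0])
        block = overdue + [tx for tx in sequencer_txs if tx not in overdue]
        self.l2_chain.append(block)
        return block


rollup = ToyRollup(force_delay=10)
rollup.submit_forced("withdraw:alice", l1_block=100)
b1 = rollup.produce_block(["swap:bob"], l1_block=105)
b2 = rollup.produce_block(["swap:carol"], l1_block=110)
print(b1)  # ['swap:bob']
print(b2)  # ['withdraw:alice', 'swap:carol']
```

A censoring sequencer can delay the withdrawal for at most `force_delay` L1 blocks in this model, but cannot exclude it.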

The challenge of decentralizing sequencers lies in the fact that additional crypto-economic incentives may be required to drive consensus amongst sequencers and performance gains of L2’s may be lost in the process.

What’s Next?

Decentralized Sequencers - much research has gone into decentralized sequencers that can remove the censorship risk and improve interoperability. Decentralized sequencers could enable atomic swaps and effective bridging between fragmented L2’s. However, in order to adopt decentralized sequencers, L2’s would likely need to give up their MEV incentives and sovereignty over their native bridges.

L2 Bridges - while L2’s scale transaction throughput, they also fragment liquidity and fragment on-chain states. L2 bridges can boost interoperability between L2’s and L1’s. However, the differing trust assumptions and a long time to finality make L2 bridges challenging to implement. An L2 bridge that solves the L2 bridge trilemma (trustlessness, extensibility, and generalizability) would add much needed interoperability to the modular blockchain landscape.

Consensus

“My goal: insulate the Ethereum base layer from centralizing tendencies of complicated stuff happening on top of / around Ethereum” - Vitalik Buterin, mev.day

Another way to look at the thought process behind modular blockchains is this: scale the consensus layer to massive decentralization while insulating it from the complexity of the other modular layers, in order to reduce security and censorship risks.

What is consensus?

The consensus layer is at the very core of blockchain technology. Blockchains are permissionless, censorship-resistant, and decentralized because of innovations in consensus. Without it, Byzantine Fault Tolerant replicated state machines wouldn’t exist, and neither would blockchains [6].

Consensus

Consensus is the mechanism through which state machines can agree on a single source of truth, even in adversarial environments. Flavors of consensus, such as Proof-of-Work, Proof-of-Stake, and Proof-of-History, have been implemented to improve performance and reduce externalities. Most notable is the successful Merge of Ethereum, which was the software equivalent of a moon landing. Through the Merge, Ethereum transitioned from PoW to PoS, making Ethereum deflationary, more energy efficient, and more decentralized. Amongst blockchains, PoS is the most common consensus mechanism.

Consensus Protocols

Consensus protocols are the layer that handles communication of block proposal and attestation across different validator nodes.

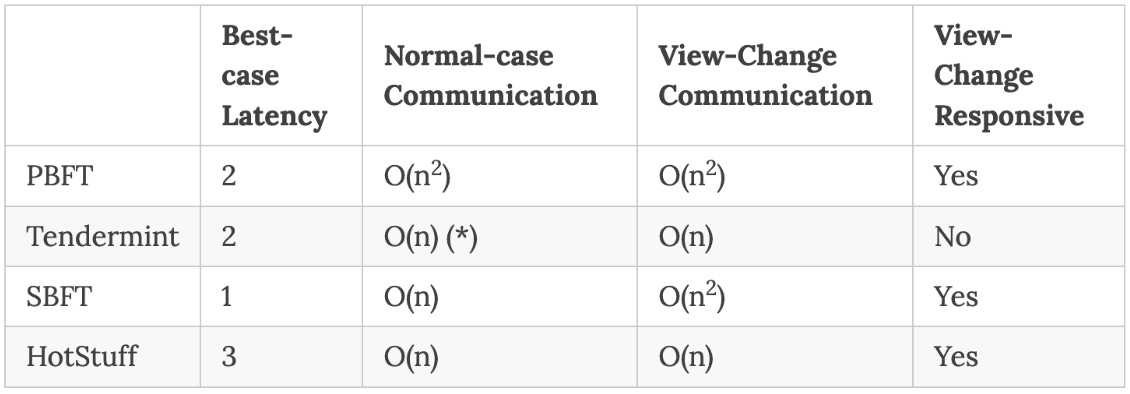

The protocol layer of consensus has also seen significant innovation from PBFT, to Tendermint, to HotStuff [7]. This evolution drove communication complexity from O(n²) to O(n). A reduction in complexity is critical to building a fully decentralized computer, as the number of nodes increases globally.
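A back-of-the-envelope count shows why the drop from quadratic to linear matters. The sketch assumes one voting round in which either every node sends its vote to every other node (all-to-all), or every node sends its vote to a leader who then broadcasts the result. It ignores constants, view changes, and signature aggregation, and the function names are hypothetical.

```python
def all_to_all_messages(n: int) -> int:
    """Every node sends its vote to every other node: n * (n - 1) messages."""
    return n * (n - 1)


def leader_relay_messages(n: int) -> int:
    """Votes go to a leader, who broadcasts the result: 2 * (n - 1) messages."""
    return 2 * (n - 1)


for n in (4, 100, 1000):
    print(n, all_to_all_messages(n), leader_relay_messages(n))
# 4 12 6
# 100 9900 198
# 1000 999000 1998
```

Growing the network tenfold multiplies the all-to-all traffic by roughly a hundred, but the leader-relayed traffic by only about ten.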

State of Consensus

The consensus layer exhibits strong network effects. A network of decentralized validators builds a strong defense against collusion and censorship. In PoS, a high staked value deters Byzantine attacks. These defenses are at the core of blockchain security.

We can basically equate the consensus layer to the so-called Layer 1’s, such as Ethereum, Solana, Binance Chain, Polygon, Sui, Aptos, all of Cosmos zones, and all of Polkadot’s parachains.

Decentralization

Decentralization protects against collusion and censorship. Depending on the protocol, an attacker would need to control 1/3+1 to 1/2+1 of a network’s nodes in order to override consensus. In terms of levels of decentralization, Ethereum currently has over 500,000 validators, Cosmos 175, Binance Chain 29, and Polygon 100, indicating different levels of censorship resistance.

Total Value Staked (TVS)
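Applied to the validator counts quoted above, those thresholds work out as follows. The sketch assumes every validator carries equal weight and reads "1/3+1" as the smallest whole number of validators strictly greater than one third; Ethereum's count is taken as its stated lower bound.

```python
def attacker_thresholds(n: int):
    """Smallest validator counts strictly above 1/3 and 1/2 of n."""
    return n // 3 + 1, n // 2 + 1


validator_counts = {
    "Ethereum": 500_000,  # lower bound quoted in the text
    "Cosmos": 175,
    "Binance Chain": 29,
    "Polygon": 100,
}

for name, n in validator_counts.items():
    third, half = attacker_thresholds(n)
    print(f"{name}: {third} to {half} validators")
# Ethereum: 166667 to 250001 validators
# Cosmos: 59 to 88 validators
# Binance Chain: 10 to 15 validators
# Polygon: 34 to 51 validators
```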

Staking provides crypto-economic security against 51% attacks. In the PoS model, validators must stake the protocol’s native currency to be eligible as a validator. Therefore, it requires significant capital to attack a network. Currently, about $38B is staked on Ethereum to secure the network. The actual cost of an attack, in an interesting twist, is much higher than 1/3 or 1/2 of the current staked amount, because as an attacker adds stake to attack a network, the overall network TVS also increases.
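The twist can be worked out directly. Suppose the existing stake S is entirely honest and the attacker must add new stake x rather than acquire existing stake (both are simplifying assumptions). To hold a fraction f of the enlarged total, the attacker needs x / (S + x) ≥ f, which rearranges to x ≥ f·S / (1 − f). The function name below is hypothetical.

```python
from fractions import Fraction


def new_stake_needed(existing_stake: int, target_fraction: Fraction) -> Fraction:
    """Minimum stake x to add so that x / (existing_stake + x) >= target_fraction."""
    f = Fraction(target_fraction)
    return f * existing_stake / (1 - f)


S = 38_000_000_000  # about $38B staked, as quoted in the text

for f in (Fraction(1, 3), Fraction(1, 2)):
    naive = f * S
    actual = new_stake_needed(S, f)
    print(f"target {f}: naive ${float(naive) / 1e9:.2f}B, "
          f"actual ${float(actual) / 1e9:.2f}B")
# target 1/3: naive $12.67B, actual $19.00B
# target 1/2: naive $19.00B, actual $38.00B
```

Under these assumptions, reaching one third of the stake costs half of the current TVS, and reaching one half costs the full current TVS.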

Time to Finality and Throughput

Time to finality and throughput are inversely related to decentralization, which is why many L1’s chose to sacrifice decentralization for performance. The communication complexity of a decentralized network causes long finality and low throughput [8]. To be fair, throughput is also a function of block size and data availability, which we’ll cover in the next section.

Time to finality has a material impact on user experience. Remember the times when you visit a Starbucks and have to stand waiting for the credit card terminal to complete your transaction? For me, that was always the annoying part of a purchase. Crypto faces this issue because of decentralization.

Liquidity

In the PoS model, stakers would put up native tokens to receive staking yields. The yield is a reward for taking on slashing risks in order to secure the network. However, once locked up, staked tokens lose their liquidity.

Liquid staking derivatives (LSDs) are a new primitive that solves the staking liquidity issue. Staking service providers would mint an LSD for staked tokens. These LSD’s can be used for swaps or borrowing collateral so that users may continue to make use of their liquidity despite staking.
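One way an LSD can track staked tokens plus yield is share-based accounting, sketched below. This is only one possible design, assumed here for illustration (other designs adjust holder balances instead); the class and method names are hypothetical, and slashing, fees, and withdrawals are left out.

```python
from fractions import Fraction


class ToyLiquidStaking:
    def __init__(self):
        self.total_staked = Fraction(0)  # native tokens held by the pool
        self.total_lsd = Fraction(0)     # LSD tokens in circulation

    def exchange_rate(self) -> Fraction:
        """Native tokens redeemable per LSD token."""
        if self.total_lsd == 0:
            return Fraction(1)
        return self.total_staked / self.total_lsd

    def stake(self, amount: int) -> Fraction:
        """Deposit native tokens and mint LSD at the current exchange rate."""
        minted = Fraction(amount) / self.exchange_rate()
        self.total_staked += amount
        self.total_lsd += minted
        return minted

    def accrue_rewards(self, amount: int) -> None:
        """Staking yield raises the value of every LSD already issued."""
        self.total_staked += amount


pool = ToyLiquidStaking()
first = pool.stake(100)    # mints 100 LSD at a rate of 1
pool.accrue_rewards(5)     # rate becomes 105/100
second = pool.stake(21)    # mints 20 LSD at the new rate
print(first, pool.exchange_rate(), second)  # 100 21/20 20
```

The LSD itself stays freely transferable, so holders keep liquidity while the exchange rate carries the staking yield.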

What’s next?

More Performant Consensus Protocols - Currently, Ethereum has a minimum of 32 ETH to set up a validator. This minimum was set to prevent massive decentralization that hurts network performance. New consensus protocols could solve this problem by reducing communication complexity in a network.

Light clients - light clients can make the states from one protocol available on another protocol, thereby securing the communication between blockchains. Having access to the originating blockchain’s state on the destination blockchain is a powerful way to increase interoperability. ZKP-based light clients have the potential to provide near-native security in cross-chain communications. This is an interesting area of active development and progress.

LSD Compounding - liquid staking derivatives bring fundamental liquidity to blockchains. Staking rates have become the new foundational yield basis in blockchains, similar to central banks setting interest rates. Holders of LSDs are entitled to the underlying yield. New ways to compound LSD yield will emerge.

DVT - decentralized validator technology (DVT) will further decentralize L1’s. Through DVT, stakers could put up native tokens below the validator threshold while enjoying the benefits of self-custody. Once the threshold is lowered, we could see a further boost in staking adoption, therefore further securing the network.

Data Availability

“Blockchain could also ensure that a government agency or company verifiably published its data — and allow the public to access and confirm that the file they have is the same one that was signed and time-stamped by the creator” - Brian Forde, senior advisor for data innovation in the Obama White House [9]

What is data availability?

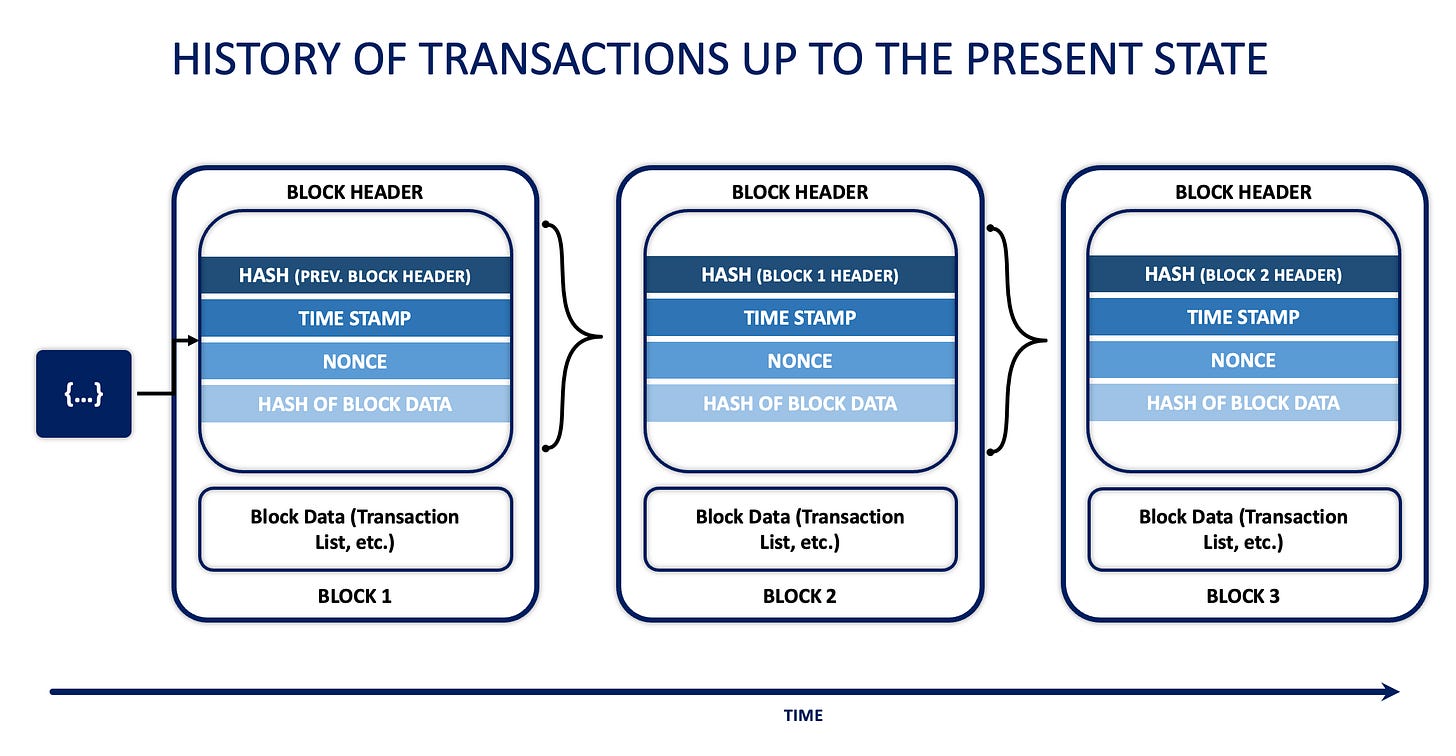

Data availability (DA) is what makes blockchains persistent. Combined with decentralization, DA allows smart programs to run in perpetuity, literally forever, because any node can enter and exit the network permissionlessly and catch up on the entire history of transactions up to the present state.

Data availability refers to the complete storage of the canonical chain, from the very first genesis block to the most recent block. Compared to the consensus layer, DA is much easier to secure because it requires only N=1 for replicated machines to recover. In other words, as long as one copy of the chain is kept, all other nodes can rehydrate from that one copy.
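The N=1 property can be illustrated with a hash-linked chain: a joining node needs only one copy of the history, from any source, because it can check that copy against a head hash it already trusts. The sketch assumes the trusted head hash comes from the consensus layer, uses SHA-256 over JSON purely for illustration, and all names are hypothetical.

```python
import hashlib
import json

GENESIS_PARENT = "0" * 64


def block_hash(block: dict) -> str:
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()


def build_chain(payloads: list) -> list:
    """Create blocks where each one commits to the hash of its parent."""
    chain, parent = [], GENESIS_PARENT
    for height, data in enumerate(payloads):
        block = {"height": height, "parent": parent, "data": data}
        chain.append(block)
        parent = block_hash(block)
    return chain


def rehydrate(copy: list, trusted_head_hash: str) -> list:
    """Verify one untrusted copy against a trusted head hash, then adopt it."""
    parent = GENESIS_PARENT
    for height, block in enumerate(copy):
        if block["height"] != height or block["parent"] != parent:
            raise ValueError(f"broken link at height {height}")
        parent = block_hash(block)
    if parent != trusted_head_hash:
        raise ValueError("copy does not match the trusted head")
    return list(copy)


chain = build_chain(["a", "b", "c"])
head = block_hash(chain[-1])
print(rehydrate(chain, head) == chain)  # True

tampered = [dict(b) for b in chain]
tampered[1]["data"] = "x"
try:
    rehydrate(tampered, head)
except ValueError as err:
    print(err)  # broken link at height 2
```

Because any alteration breaks a hash link, the node does not need to trust whoever served the copy.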

State of DA

Cost

The vast majority of gas costs go to storing data on-chain. EIP-4844 (proto-danksharding) aims to add dedicated blob space for this data. Ultimately, Vitalik envisions a future where only state roots are stored on chain, and detailed transaction data is stored on dedicated DA layers. ZK recursion is another approach to solving the DA cost problem.

Availability

Availability is the flipside of censorship. With publicly accessible DA, no one entity can censor data access. However, as blockchains are append-only, the size of data will grow indefinitely, so down-sampling technology and ZK technology are used to keep storage requirements in check.

Completeness

Data attestations are important to ensure completeness. However, as only N=1 copy of data is required to ensure DA for a network, this problem is much easier to solve than consensus.

Privacy

Privacy must be balanced with accessibility and correctness. In this field, hardware encryption, zero-knowledge proofs, and FHE (fully homomorphic encryption) are being explored as solutions to ensure privacy in a world of open data.

Correctness

Correctness is challenging to establish in a trustless environment. Advances in light client technology are an exciting way to guarantee correctness in cross-chain communication when accessing blockchain data.

What’s next?

Proto-danksharding - Proto-danksharding was proposed by Dankrad Feist and Protolambda in order to increase DA throughput by increasing the block size to about 1MB on Ethereum. The added block space goes into blobs, which L2’s can use to store their states and lower costs by up to 100x.

Volitions and adamantiums - volitions are L2’s that give users the option to store their data on the L1 or another DA layer. For example, users could optionally store sensitive transaction details on another DA. To take this even further, adamantiums are L2’s that allow users to store transaction details on a private personal DA layer. Adamantiums do not exist yet but are an interesting concept for giving users more sovereignty over their data.

ZK-Recursion - ZK recursion techniques can drastically improve the throughput of DA layers and lower the cost of data storage, with security and verifiability. Theoretically, an L2 can host L3’s, L4’s, and so on, and roll up all of the layers of transactions into a single block to settle on L1. This recursion technique is powerful in extending blockchain scalability.
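The per-transaction choice behind a volition can be sketched as a simple router. This is a toy model under stated assumptions: a commitment (here a SHA-256 hash) is always recorded on L1, while the full data goes to whichever store the user picks. `ToyVolition` and the `"l1"` / `"external"` labels are hypothetical.

```python
import hashlib


class ToyVolition:
    def __init__(self):
        self.l1_data = {}      # commitment -> data posted on L1
        self.external_da = {}  # commitment -> data posted on another DA layer
        self.commitments = []  # assumed to be recorded on L1 in every case

    def submit(self, tx_data: bytes, da: str) -> str:
        """Store the data where the user chose and record its commitment."""
        if da not in ("l1", "external"):
            raise ValueError(f"unknown DA choice: {da}")
        commitment = hashlib.sha256(tx_data).hexdigest()
        store = self.l1_data if da == "l1" else self.external_da
        store[commitment] = tx_data
        self.commitments.append(commitment)
        return commitment


v = ToyVolition()
c1 = v.submit(b"public transfer", da="l1")
c2 = v.submit(b"sensitive details", da="external")
print(len(v.commitments), len(v.l1_data), len(v.external_da))  # 2 1 1
```

An adamantium, in this picture, would be the case where the external store is one the user runs privately.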

Conclusion

From monolithic to modular, blockchains have advanced towards solving the blockchain trilemma. The modular blockchain paradigm opens up the possibility of highly optimized blockchain layers that can serve the masses with great user experience.

With composability between each layer, a plethora of blockchain architectures are available to developers. The above table (from here) combines settlement and consensus into a single security layer, indicates choices of chains by numbers 1, 2, 3, or N for multiple chains, and demonstrates the variety of architectural combinations through nomenclature and type.

Separation of modules doesn’t stop at execution, consensus, and data availability; other parts of the stack, such as transaction initiation, sequencing, and block production, are each being optimized and decentralized. This decentralization will continue to better protect blockchains from collusion and censorship. Furthermore, novel means of interoperability, architectural designs, and cryptography advancements continue to widen the aperture of what blockchains are capable of.

New technologies from the perspective of modular blockchains are being introduced at breakneck speed, bringing us closer to the day that blockchain is ready for mass adoption with great user experience.

About the Author

Roy Lu is a Partner at HashKey Capital and leads the firm's venture capital investments based out of SF. Prior to HashKey, Roy was an investor at Lightspeed Ventures and Morpheus Ventures. Before venture, he was a founder, software engineer, and biomedical researcher. Roy holds an MBA from Stanford Graduate School of Business. In his free time, Roy can be found surfing, foodying, golfing, and flying his drone.

References

[1] https://eips.ethereum.org/EIPS/eip-4844

[2] https://ethereum.org/nl/developers/docs/scaling/

[3] https://ethereum.org/en/roadmap/danksharding/

[4] https://ethereum.org/en/staking/

[5] https://vitalik.ca/general/2022/08/04/zkevm.html

[6] https://en.wikipedia.org/wiki/Byzantine_fault

[7] https://decentralizedthoughts.github.io/2019-06-23-what-is-the-difference-between/

[8] https://pontem.medium.com/a-detailed-guide-to-blockchain-speed-tps-vs-80c1d52402d0

[9] https://hbr.org/2017/03/using-blockchain-to-keep-public-data-public
