#15 - Beyond Zero Knowledge Proofs

Understanding the Privacy Limits of ZKPs and Other Tech Solutions

Stanford Blockchain Review

Volume 2, Article No. 5

📚 Authors: Sean Pop, Sean Melcher, Guy Zyskind – Secret Labs

🌟 Technical Prerequisite: Moderate/Advanced

Introduction

The contagious excitement around the potential of zero-knowledge proofs (ZKPs) has put some people under a spell. For this camp, ZKPs feel like a cryptographic nirvana where there is no problem that ZKPs can’t solve.

ZKPs have been sold by some as a comprehensive privacy solution. That is simply inaccurate. The goal of this piece is to bring an honest assessment of what ZK can and can’t do, particularly when it comes to privacy. We will also examine complementary tooling that can be paired with ZKPs to harden them.

ZKPs have some wonderful strengths, but they also have limitations. It’s critical to understand both in order to escape the illusion of their omnipotence. Only when armed with that understanding can we responsibly include them in our toolbox for building.

ZKPs allow users to prove knowledge without revealing details

Zero knowledge proofs make it possible for users to prove they know something without revealing any details about it. For this to work, there must be a “prover” and a “verifier”.

There’s a popular story of a cave that helps explain ZKPs:

Two friends, Alice and Jorge, find a cave. The cave has two paths in & out that lead to the middle, where there’s a door. There’s a code on this door that, supposedly, connects the two paths.

Jorge says he knows the code on the door. Alice would like to buy the code from him but wants proof that he knows it. Jorge can’t tell her the code straight away to prove it. So they both agree to a “zero knowledge” exchange test.

Alice will tell Jorge to enter the cave via one of the paths. If he really has the code, he’ll be able to exit via the other path.

Zero knowledge proofs are the cryptographic version of this story. The prover generates a “proof” that convinces the verifier the claim is true without the secret itself ever being exchanged between the two parties.
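To make the cave story concrete, here is a minimal sketch of one classic interactive zero-knowledge protocol, Schnorr identification. It is an illustration only, not the scheme used by any project mentioned in this article. The function names are hypothetical, and the parameters (p = 23, q = 11, g = 2) are toy values that are far too small to be secure. Jorge plays the prover who knows the secret `x` (the door code), and Alice plays the verifier who only ever sees the public value `y`.

```python
import secrets

# Toy parameters (assumption, insecure): G generates a subgroup of prime order Q in Z_P*.
P, Q, G = 23, 11, 2

def keygen():
    """Prover's secret x and the public value y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1      # secret in [1, Q-1]
    return x, pow(G, x, P)

def prover_commit():
    """Prover picks a random nonce r and sends the commitment t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)

def verifier_challenge():
    """Verifier replies with a random challenge c."""
    return secrets.randbelow(Q)

def prover_respond(x, r, c):
    """Prover answers with s = r + c*x mod Q; the random r masks x."""
    return (r + c * x) % Q

def verify(y, t, c, s):
    """Verifier checks G^s == t * y^c mod P without ever learning x."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P

if __name__ == "__main__":
    x, y = keygen()                 # Jorge knows x; Alice only knows y
    r, t = prover_commit()
    c = verifier_challenge()
    s = prover_respond(x, r, c)
    print("proof accepted:", verify(y, t, c, s))
```

With a group this small a cheating prover can guess the challenge with probability 1/11 per round, so a real deployment would use a large group (or repeat the exchange) to make cheating negligible.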

ZKPs offer transactional privacy and scalability

One easy use case for ZKPs is identifying users and checking signatures. This fits solidly into their area of strength.

Projects like ZCash also leverage zero knowledge to offer transactional privacy.

ZKPs are well-known for the scalability they enable. A packet of information can be replaced by a lightweight “proof”, relieving blockchain congestion and speeding up transactions. This makes ZKPs suitable for building a highly scalable layer 1, or a layer 2 scaling solution like zero-knowledge rollups.

ZKPs cannot accommodate secure computation or scalable privacy

ZKPs can fulfill the promise of privacy “locally” (at a transactional level). But they cannot keep secrets “globally” (at the network, application, or multi-party level).

For example, to make DeFi private, a user needs to be able to trade with a trustless agent—like a DEX based on smart contracts—while keeping their data private at all times. And this isn’t possible with ZKPs.

Privacy breaks down for ZKPs when we move from transactions into the realm of secure computation.

Guy Zyskind, CEO of Secret Labs, explains how this works in practice:

A centralized party (often called the sequencer) executes all transactions (and computations) off-chain. This means that clients interact directly with this sequencer instead of the blockchain and send it their non-encrypted input data. The sequencer, after running all computations, produces a succinct proof and sends it to the blockchain alongside the outputs (usually the updated state). The blockchain, which acts as the verifier, verifies that the proof is correct, and if so, applies the state changes without learning the clients’ data directly. All general-purpose blockchain ZK solutions use this scaling method.

It’s simple. If you can see your data, you can prove statements about it. You can’t prove similarities or differences with another person’s data, because you can’t see it. Who or what does that? The sequencer, which generates a proof over all participants’ data.

But if a user needs to trust an off-chain, centralized sequencer with their data… we’re back to the basic problem of Web2.

There are also theoretical concerns about information leakage on the infrastructure layer. For example, ZKPs don’t protect against transaction size analysis or various other forms of metadata leakage, which could potentially reveal information about ZKP transactions.

In short, ZKPs are great for keeping secrets locally (like a peer-to-peer transaction). But they can’t keep that same data private on a global/network scale.

This is, in large part, because zero knowledge proofs alone can’t make a smart contract or a DEX private. And that, unfortunately, is why ZKPs aren’t a fix-all for privacy.

How do the strengths and limits of ZKPs stack up against other privacy solutions?

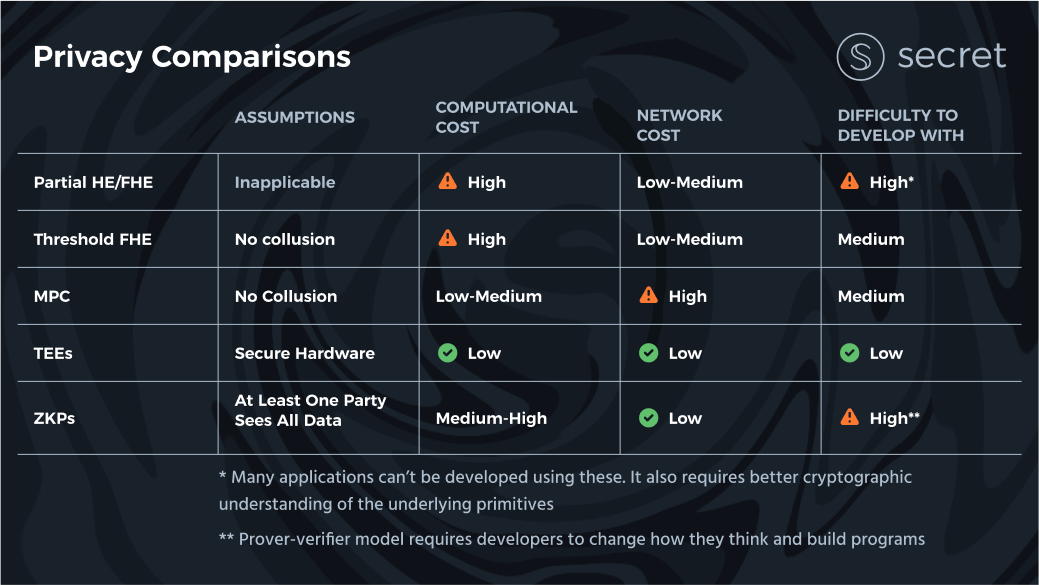

In cryptography, there’s rarely an “optimal” method. Every solution mentioned here has multiple constructions and can be used alone or in tandem with other solutions to solve interesting problems on a case-by-case basis. As shown in the graphic above, each construction and combination comes with trade-offs and relative advantages.

Fully Homomorphic Encryption computes over encrypted data without the need to decrypt

Fully homomorphic encryption (FHE) is an idea that’s easy to understand but extremely hard to implement.

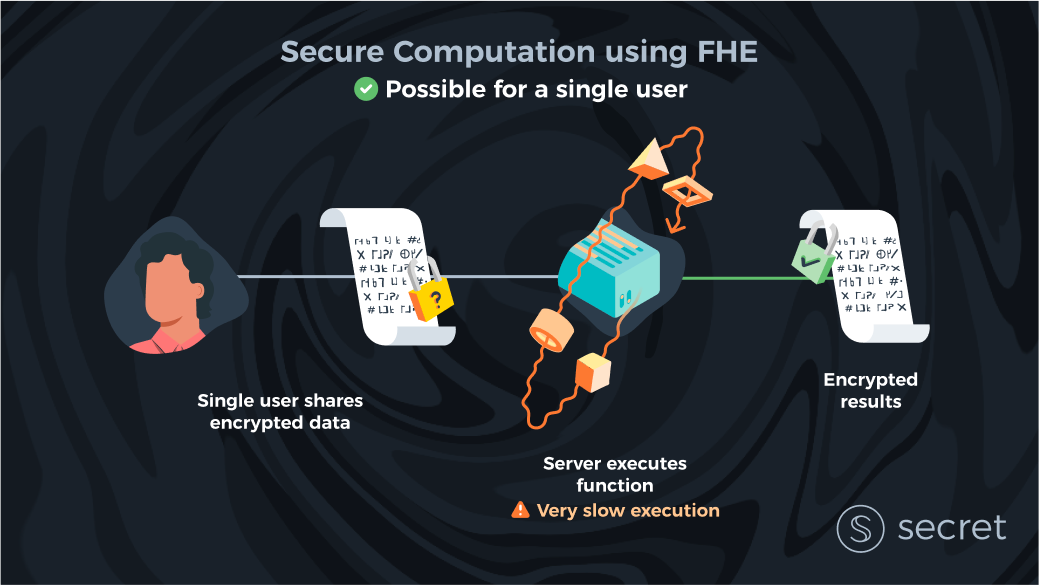

Imagine a world in which there is a single user and only one server. The server is not privacy-preserving. How do we solve for privacy?

With FHE, a user can send encrypted data to the server, and the server computes over her data without ever seeing it in plaintext.

FHE is the most inefficient of all existing privacy technologies

Unfortunately, FHE, to date, has been extremely inefficient. FHE is, in fact, the slowest of all privacy-preserving technologies we know. However, the efficiency of FHE computation has been improving at an incredible rate, and specialized hardware (CPU → GPU → FPGA → ASICs) will improve computation speed further by several orders of magnitude in the next decade.

But even in 5-10 years, it’s most likely that using FHE capabilities will only make sense for certain use cases or for certain parts of smart contract execution. For example, you may want to use FHE to store and operate on extremely sensitive (and small) data, like cryptographic keys or SSNs.

FHE is unable to compute over data encrypted with different keys

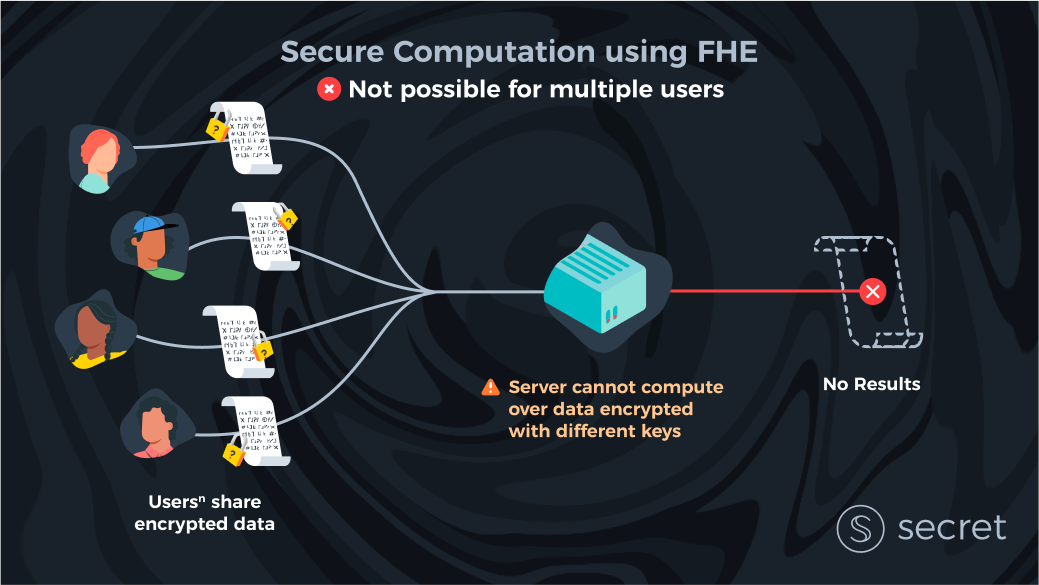

How do we introduce a second client to this secure computation problem?

Let’s imagine there are only two clients in the world. Both clients have their own input and want to compute a function over their inputs. Both want privacy.

With ‘pure’ FHE, it’s unclear how to do this as each client used their own key to encrypt their data. FHE is unable to compute over data encrypted with different keys. So how do we solve this?

Partial Homomorphic Encryption (PHE) cannot support general smart contracts

It turns out that many methods need to be used to solve this problem. In addition to fully homomorphic encryption, which can compute any arbitrary function over encrypted data, there are many partially homomorphic encryption schemes (HE or PHE). These partial schemes can compute specific functionalities—usually either addition or multiplication—but not both (note that all functions can be reduced to addition and multiplication operations). These partial HE schemes were invented decades ago and are much more efficient, and they may be sufficient for a limited set of use cases, but they are not sufficient for the general case of secure computation.
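As a rough illustration of what “addition but not multiplication” means, the sketch below implements a toy version of Paillier, a well-known additively homomorphic scheme. It is chosen here only as an example and is not claimed to be what any network mentioned in this article uses. The primes (17 and 19) and the function names are assumptions for demonstration and offer no real security.

```python
import math
import secrets

def keygen(p=17, q=19):
    """Toy Paillier key generation with generator g = n + 1 (insecure sizes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)   # valid shortcut because g = n + 1 (Python 3.8+)
    return n, (lam, mu)    # public key n, secret key (lam, mu)

def encrypt(n, m):
    """c = (n+1)^m * r^n mod n^2 for a random r coprime to n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2

def decrypt(n, sk, c):
    """m = L(c^lam mod n^2) * mu mod n, where L(u) = (u - 1) // n."""
    lam, mu = sk
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n

def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return (c1 * c2) % (n * n)

if __name__ == "__main__":
    n, sk = keygen()
    total = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))
    print(decrypt(n, sk, total))  # sum of the two plaintexts
```

The server can add encrypted values without the secret key, but this scheme offers no operation that multiplies two encrypted values together, which is why it cannot evaluate arbitrary functions on its own.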

Several networks aim to use such a partial scheme to achieve privacy (e.g., Penumbra and Dero). However, it is important to understand that they cannot, by definition, support general-purpose smart contracts. The application of these techniques to privacy-focused blockchains is novel, but the use cases they can support are quite limited.

There are non-trivial ways to extend the range of applications you can support with a partial solution like this, but that would require deep expertise in cryptography. These applications often bring other hidden trade-offs that are hard to reason about. For that reason, PHE will likely never allow developers to build arbitrary applications with privacy.

So if FHE or partial HE solutions alone can’t solve the multi-client secure computation problem… what can?

Secure Multi-Party Computation (MPC) distributes computation across a system

MPC is a distributed systems approach to computing over private data. In that sense, it’s a natural fit with the basic architecture of blockchains.

In MPC, instead of trusting a single server, we have several untrusted servers that jointly run a computation over client data. Changing the trust assumptions greatly increases the solution space. MPC solutions are developed with multiple clients in mind. So there are no theoretical problems with servers combining data from multiple users (unlike FHE).

Interestingly though, MPC can be combined with FHE to create a “Threshold FHE”. Threshold FHE splits a single FHE secret (decryption) key into shares held by the servers.

MPC relies on security by non-collusion

Using FHE (alone) allows the user to keep her secret encryption key without the server ever getting access to her data.

But with MPC, if all servers collude, they can reconstruct the private data. As long as one server is honest, this collusion is avoided and the model is secure.

It’s important to note at this point that MPC solutions don’t contain keys. Instead, MPC hides the data by giving each server an “encrypted” share of the data so that you’d need all shares to “decrypt” the data. Additionally, each share alone does not reveal anything about the plaintext data.
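The idea of shares can be sketched with additive secret sharing, one common building block for MPC. This is a minimal illustration under assumed names and an assumed modulus (`PRIME`), not a full MPC protocol: each server holds one random-looking share, the servers add their shares locally, and only the combination of all shares reveals a result.

```python
import secrets

PRIME = 2**61 - 1  # assumed modulus for the sketch (a Mersenne prime)

def share(secret, n_servers):
    """Split a value into n shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_servers - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares

def reconstruct(shares):
    """All shares are needed; any missing share leaves the value hidden."""
    return sum(shares) % PRIME

def add_shared(a_shares, b_shares):
    """Each server adds the two shares it holds, never seeing the inputs."""
    return [(a + b) % PRIME for a, b in zip(a_shares, b_shares)]

if __name__ == "__main__":
    alice = share(120, 3)   # client 1's private input, split across 3 servers
    bob = share(80, 3)      # client 2's private input
    result_shares = add_shared(alice, bob)
    print(reconstruct(result_shares))  # combined result of both inputs
```

Unlike the FHE setting, the two clients need no common key here: both inputs are split across the same set of servers, which is why combining data from multiple users is straightforward.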

In practice, one could tailor the collusion thresholds to optimize for either liveness or privacy. In the above example, where all shares are required to reconstruct the data, we obtain maximum privacy.

However, it only takes a single server to mount a denial of service (DoS) attack against the entire system. This is because it takes only one server not responding to a client’s request, and the result can’t be computed.

On the other hand, we could require only ½ or ⅔ of the shares. This would require the majority of servers to be honest and not collude while executing the computation.
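A threshold of this kind is commonly built with Shamir secret sharing. The sketch below is a simplified illustration with hypothetical function names and an assumed field modulus: the secret is the constant term of a random polynomial, and any `threshold` of the `n` points are enough to recover it, so the system stays live even if some servers go offline.

```python
import secrets

PRIME = 2**61 - 1  # assumed field modulus for the sketch (a Mersenne prime)

def split(secret, threshold, n):
    """Evaluate a random degree-(threshold-1) polynomial with f(0) = secret."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def f(x):
        acc = 0
        for c in reversed(coeffs):   # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, f(x)) for x in range(1, n + 1)]

def reconstruct(points):
    """Lagrange interpolation at x = 0 recovers the secret."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

if __name__ == "__main__":
    shares = split(1234, threshold=4, n=6)   # 4 of 6 servers, i.e. two thirds
    print(reconstruct(shares[:4]))           # any 4 shares suffice
    print(reconstruct(shares[2:]))           # still works with servers 1 and 2 offline
```

The trade-off described above is visible in the parameters: lowering `threshold` tolerates more unresponsive servers but also means fewer servers need to collude to recover the data.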

With MPC, privacy is more difficult to solve than correctness

Correctness is verifiable: if a transaction is tampered with, either the tampering is detected or consensus halts. Collusion attacks on privacy, however, cannot be detected, which makes them ‘silent’ attacks.

If servers colluded (outside the system) by pooling their shares, we would never know that they had decrypted the data. To make this attack harder, we can aim to have as many servers as possible run the computations, so that more parties would need to collude.

Sadly, MPC scales poorly with the number of parties and increasing the number of servers hinders liveness. It therefore seems that MPC alone is not enough and other technologies that make collusion hard need to be implemented.

TEEs can complicate the collusion attack vector for MPC

One way to make MPC collusion more difficult is to force all servers in the distributed system to use Trusted Execution Environments (more on this below).

Alternatively, one can complicate collusion by working in a permissioned setting, where servers are (partly) trusted and can be re-identified if a data leakage occurs. This is the direction Partisia Blockchain appears to be taking: an off-chain set of semi-trusted nodes execute private computations, and the blockchain only stores and verifies the state.

Trusted Execution Environment (TEE) separates computation from the rest of the CPU

A TEE moves processing into a “black box”: an isolated region of the CPU that is inaccessible to the rest of the computer, including the host operating system. This region can store data (like encryption keys) and run computations that cannot be tampered with by the host of the machine. Also, the host cannot extract any data stored in that region (at least, in theory).

TEEs can solve for privacy and correctness by requiring our server (or in the blockchain setting, servers) to execute all computations inside their TEE. In addition, we can ask clients to encrypt their input data using a key that’s also only available in the servers’ TEEs.

With this design, no one, not even the host who owns the servers, has access to the clients’ data. This is because data is only ever decrypted and computed over inside the TEE. For more information on how such a system works in practice and how encryption keys are kept safe, take a look at the Secret Network technical documentation.

Another way to think about TEEs is as a hardware approximation of the simple world we imagined earlier (with a single user and a single trusted server that can run computations both correctly and privately). However, making the most out of TEEs is much more complicated than that world. To prevent censorship or DoS attacks, it’s still best to use TEEs in a distributed system like blockchain instead of relying on a single server.

Because TEE systems obtain security via hardware reliance as opposed to pure cryptography, they are very efficient. While cryptographic solutions are generally orders of magnitude slower than computing in the clear, in most cases TEEs have less than 40% overhead in computation time. This mainly comes from the requirement to decrypt input and re-encrypt output to preserve privacy.

The main drawback with TEEs that worries users is the possibility of side-channel attacks. In recent years, researchers have shown how they can extract information from TEEs mostly using speculative execution, a method used by all modern processors to gain efficiency. While most of these problems can be solved in software or hardware and are difficult to exploit in practice, they do present a disadvantage compared to pure cryptographic techniques on this specific vector of attack. Architectural bugs (which can impact any type of hardware, not just secure enclaves) are also possible and pose a serious risk, which should not be ignored.

With these tradeoffs considered, TEEs still currently stand as the best practical solution, especially for high performance computations on low sensitivity data. Even when the core of a potential solution is cryptographic in nature, TEEs are key to enhancing security and can help prevent collusion while boosting scalability.

ZKPs are “hardened” when combined with additional blockchain privacy solutions

For example, a builder might combine ZKPs with multi-party computation to build a fully private application. There are also hardware-based solutions (trusted execution environments, TEEs)—although you probably don’t need additional ZKPs when using TEEs.

Multi-party computation makes up for ZKPs’ shortcomings by enabling computation over encrypted (i.e., private) data. Hence, you can keep data private at the smart contract level.

In this example, the internal state of a DEX could be encrypted, with MPC allowing you to perform computations over the data and update it without ever decrypting it. This keeps the data private at all times.

Ultimately, ZKPs can help in their areas of strength: verifying user identity, signing, and lightweight transactions.

The future of Web3 privacy isn’t one-size-fits-all

As we have seen through this discussion, every privacy solution has its trade-offs. And no matter what you might have heard, there’s no single solution that can address all our privacy needs and has zero risk.

Building responsibly with any privacy technology means understanding its inherent limitations.

When these are clearly understood, we can reach into a broader privacy toolbox and, potentially, pair that technology with a solution that complements its respective weaknesses. ZKPs, TEEs, MPC, and FHE all bring something to the table with strengths and limitations.

On-chain privacy should be “normal” for users. Building towards that vision means being open to a variety of privacy tools instead of settling into maxi-mentality around a single solution.

About the Authors

Secret is building a constellation of privacy solutions for builders and users: Secret 2.0. Our vision is to allow anyone to build generalizable and composable decentralized applications with programmable privacy.

Sean is the Co-Founder of Secret Agency DAO and an educational creator for the network. Guy Zyskind is the Founder of Secret, CEO of Secret Labs, and brought the world’s first private smart contracts to mainnet for Secret in 2020. As a former researcher at MIT, he is the author of some of the most highly cited blockchain privacy papers (including “Decentralizing Privacy”, written in 2015).

Join Secret University for free online Rust training: https://scrt.university

Explore HackSecret videos and resources: https://scrt.network/hacksecret-2023

